Systematic Planning, Statistical Analyses, and Costs

The following sections describe the process and considerations involved for DU planning, including statistical analysis and cost estimates.

3.1 Systematic Planning and DU Design

Section 3.1.1 through Section 3.1.5 provides a summary of the key aspects of systematic planning and DU design in relation to the collection of soil and sediment samples. Section 3.1.6 provides three examples that illustrate the application of these key aspects of planning for different types of environmental problems:

- an agricultural field, settling pond, and drainage swale being assessed using screening criteria (Example 1)

- a former agricultural field being converted to residential use (Example 2)

- a former industrial facility that is to be redeveloped, with human health and ecological endpoints (Example 3)

3.1.1 Overview

As with any such sampling event, characterization must generate data in three dimensions so that data needs are met for a range of technical users who participate in the site investigation process. This means collecting data to inform each step of an environmental investigation, including source area identification, evaluation of contaminant fate and transport, and assessment of potential exposure and risks.

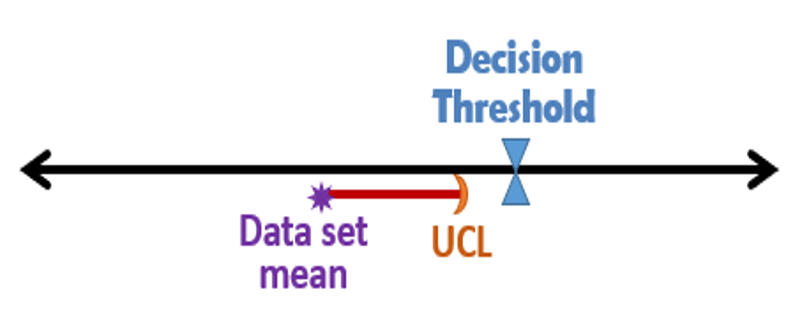

ISM-related planning guidance is consistent with USEPA’s DQO guidance (USEPA 2002c), primarily utilizing the first four steps of the DQO process: problem formulation (step 1), identify study goals (step 2), identify information inputs (step 3), and define study boundaries (step 4). Some material associated with step 5 (develop the analytic approach) and step 7 (develop the plan for obtaining data) is also provided in relation to the examples used to demonstrate systematic planning and DU design with ISM. Use of ISM in conjunction with statistical hypothesis tests, which is the focus of DQO steps 5 and 6, is taken up in Example 2 and addressed in detail in Section 3.2.

Note that implementation of ISM does not require that the DQO process be followed. However, to ensure that data obtained during environmental investigations are adequate for their intended purposes, it is strongly recommended that data collection activities be planned and developed through a systematic planning process (SPP) with end users, including the development and consideration of a CSM. Establishing clear objectives at the beginning of the investigation is crucial to efficient and effective site characterization. As described in this section, the outcome of good systematic planning is well-thought-out DUs and SUs (see Section 2.5.1.2), whose locations and dimensions produce information to support all the investigation questions.

USACE’s technical project planning (TPP) process (USACE 1998) provides another example of a systematic planning framework that can readily be used with ISM. More recently, the DQO process has been integrated in the manual for implementation of the Uniform Federal Policy for Quality Assurance Project Plans (USEPA 2005b).A list of guidance documents that can be used with ISM in addition to the DQO, TPP, and uniform federal policy (UFP)-QAPP guidance describing planning processes is provided below:

- “Technical Guidance Manual for the Implementation of the Hawai’i State Contingency Plan” (HDOH 2017b)

- “Improving Environmental Site Remediation Through Performance-Based Environmental Management” (ITRC 2007a)

- “Best Management Practices: Use of Systematic Project Planning Under a Triad Approach for Site Assessment and Cleanup” (USEPA 2010)

- “Triad Implementation Guide” (ITRC 2007b)

3.1.2 DQO step 1: problem formulation (what is the problem, and what decisions need to be made?)

The basic aspects of problem formulation, including establishing the project planning team and developing the CSM, are not unique to investigations employing ISM. When a project team is considering inclusion of ISM as a project tool in the first step of systematic planning, they should consider how ISM might fit into answering related study questions during development of the CSM by calling upon the expertise of a multi-disciplined team (including, for example, chemistry, data analysis, engineering, field sampling, geology, QA, modeling, regulatory, risk assessment, soil science, statistics, and toxicology experts). Important aspects of a CSM for supporting systematic planning are described below, with particular emphasis on applying a CSM for ISM. Additional information on developing and applying CSMs is provided in Section 3 of ITRC’s human health risk assessment guidance (ITRC 2015). USACE Engineer Manual 200-1-12, “Conceptual Site Models,” also provides examples of several different types of CSMs and their use(USACE 2012).

CSMs are essential elements of the SPP for complex environmental problems. They serve to conceptualize the relationships among contaminant sources, environmental fate and transport mechanisms, potential exposure media, and the potential routes of exposure to these media for human and ecological receptors. The structured organization of information to form a CSM creates both a summary of the current understanding of site conditions and anticipates future conditions in a manner that can help the project team identify data gaps in the information needed to make project decisions. These gaps are the basis of study goals (sampling objectives) in the next step of the planning process. In this sense, certain study goals can be thought of as hypotheses related to the CSM, and so achieving sampling objectives serves the purpose of increasing confidence in the CSM.

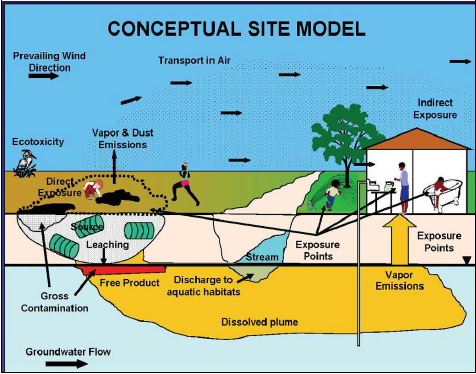

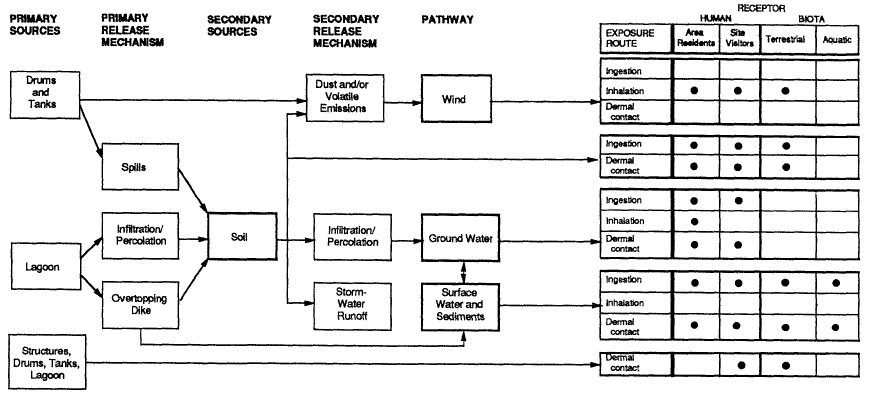

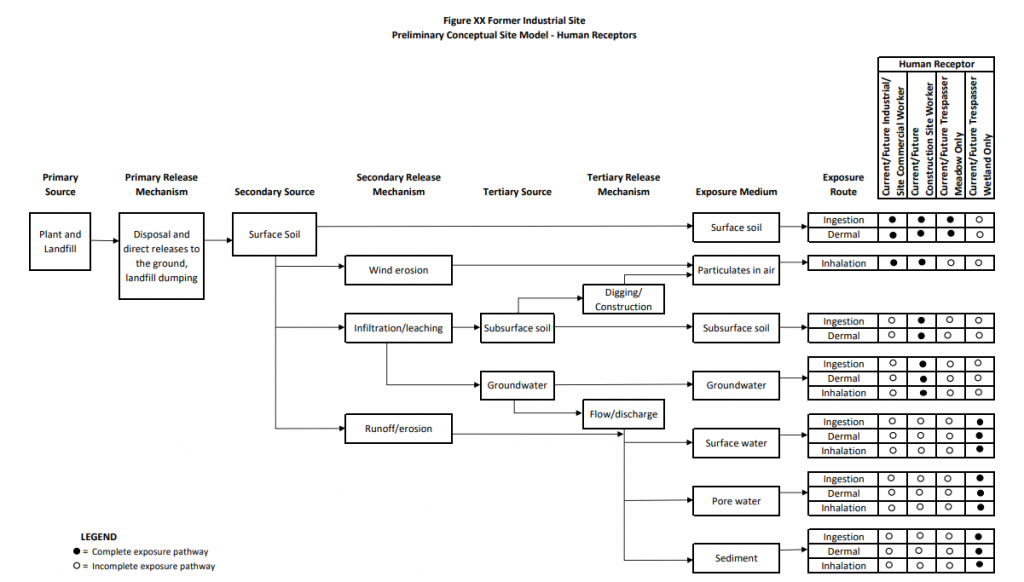

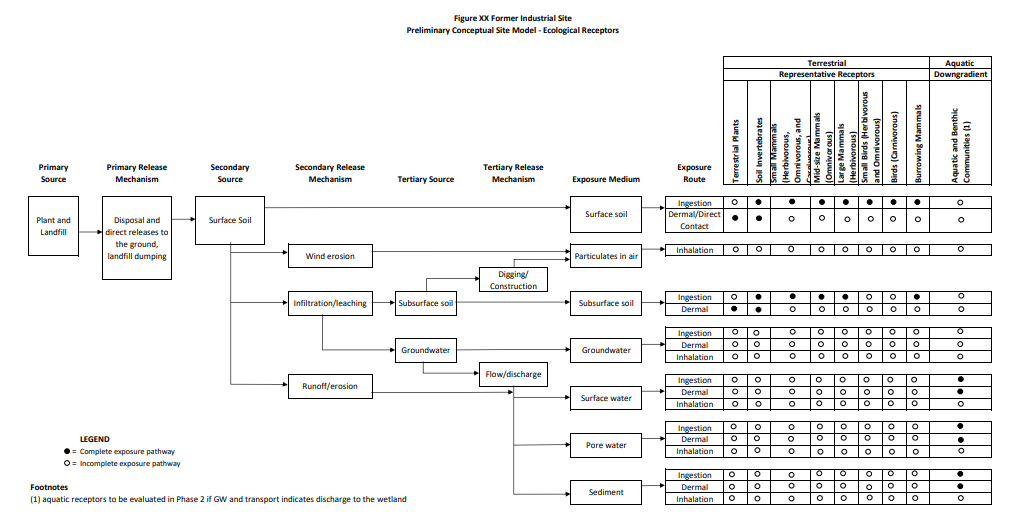

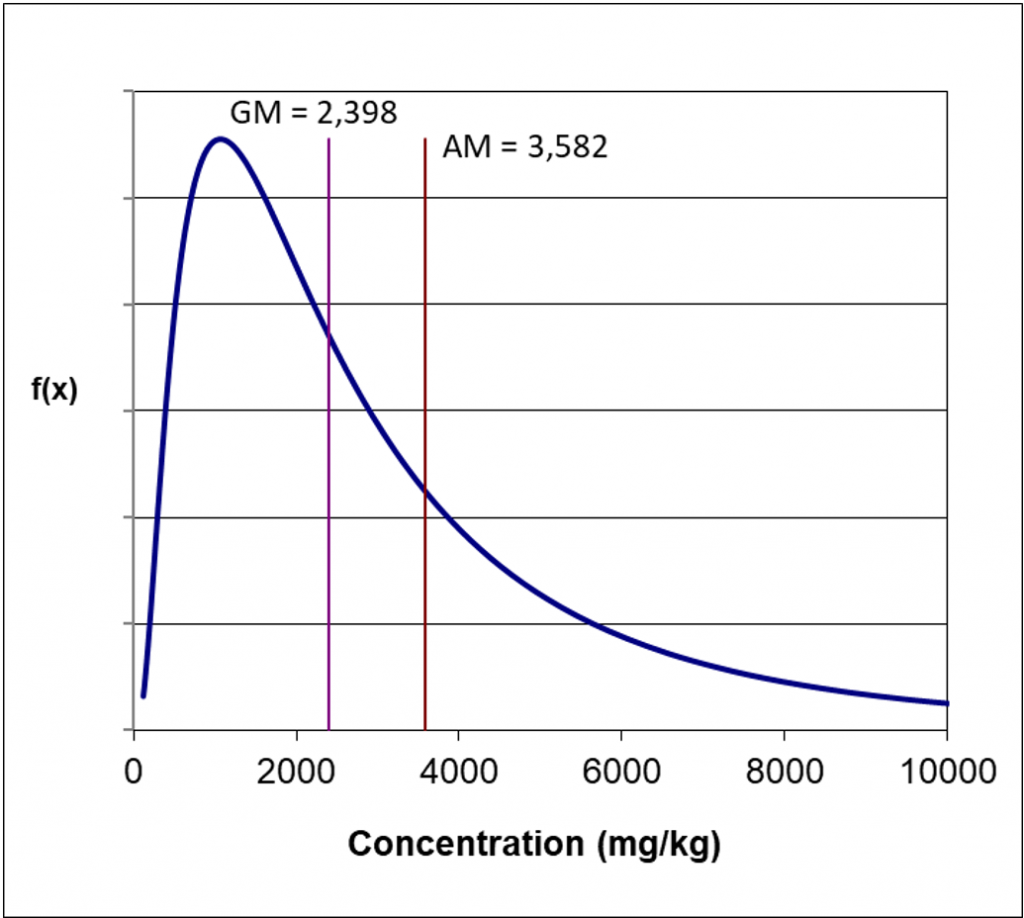

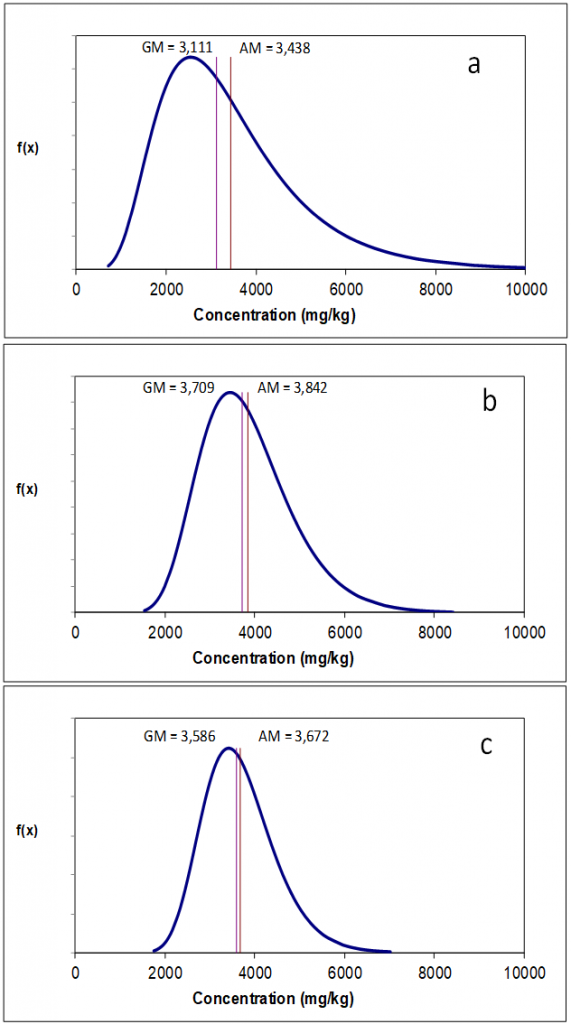

In addition to a narrative description of these component relationships, a CSM commonly includes pictorial and/or graphical representations of the components of the exposure pathway analysis. Figure 3-1 provides an example of a pictorial CSM depicting a contaminated source area and the pathways through which that contamination travels to reach human health and ecological receptors. Another CSM is rendered in graphical format in Figure 3-2. The pictorial representation of a CSM, such as the example in Figure 3-1, can be particularly useful in risk communication with stakeholders. The graphical depiction, shown in Figure 3-2, is particularly useful for framing study goals and the related inputs and boundaries for supporting site-specific risk assessment. See additional examples in Figures 3-14a and 3-14b.

The CSM may also include summaries of available environmental data, information pertaining to source terms such as listings and quantities of process chemicals, and preliminary transport modeling results.

Source: ITRC ISM-1 Team, 2012.

Source: USEPA, 2011.

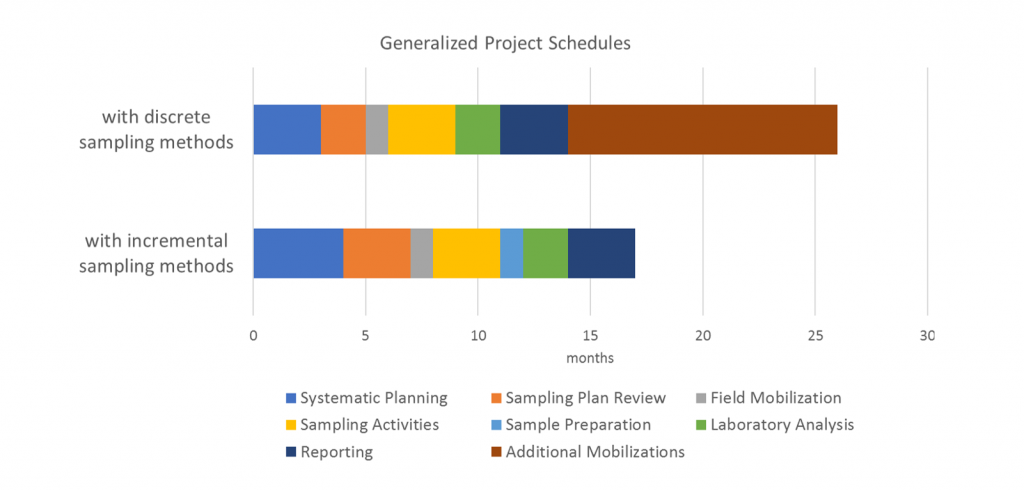

Decisions about the general sampling approach for a project are crucial in ensuring the data will be adequate to meet project objectives. Project planners may elect to employ ISM or traditional discrete sampling, or even a combination of the two, although these data are not directly comparable and cannot be easily combined (see Section 6.2.5 and Section 6.2.6). The optimum approach depends on the CSM, the sampling objectives, and how the data are to be used. In addition to the technical considerations associated with selecting among different sampling approaches, project planners must also consider relevant regulatory requirements, as well as resource, time, and budget limitations.

Investigation objectives can change as projects progress, which means new information and objectives must continually be reconsidered over the course of the project. Consideration of dynamic or iterative sampling strategies is as essential for ISM as it is for discrete sampling. An example of responding to changing conditions could include establishing additional or alternative DUs to better understand the distribution of contaminant concentrations at a site or to assist in the design and selection of remedial options, based on a review of initial data. Specifically, such a case could involve a relatively large area that was initially thought to be clean and then determined to be heavily contaminated. In this situation, it becomes cost-beneficial to resample subareas in hopes of isolating the contamination and reducing remediation costs. Generally, if DUs are designated in a well-thought-out manner with clear decision statements regarding how the data will be used to answer investigation questions, this will minimize the need for additional unexpected sample collection.

| The CSM is essential for DU design. Determining the size, shape, location, depth, and number of DUs and SUs is a critical component of the planning process and is a function of the CSM, the related study objectives, and ultimately the decision mechanisms that relate to the problem formulation. |

All contaminant concentrations in soil are heterogeneous on some scale (see Section 2), thus the determination of the sampling scale and the related increment density is very important in all sampling situations. If a finer resolution of contaminant distribution is needed to address the objectives of the investigation, then smaller DUs should be considered. Some basic questions that might be considered include, “How do the definitions of DUs and SUs fit to the study goals of the investigation?” and, “How will the resulting data be used in decision-making to solve the environmental problem?” The designation of DUs and SUs should support and clarify the objectives of the investigation. As the investigation proceeds, if study questions are refined or new questions arise, the DUs, SUs, and decision mechanisms should be reevaluated to ensure they will support the decisions that need to be made.

3.1.3 DQO step 2: identifying study goals (what types of additional information do we need?)

As the goals of the study are defined, the project team should consider the suitability of ISM for meeting those goals. ISM is particularly suited to decision problems related to average soil or sediment concentrations. Through the collection of a large number of increments from multiple locations and a relatively large sample mass, ISM provides better coverage and a more robust estimate of average concentrations in a volume of soil than is usually achieved with discrete or traditional composite samples. This is particularly important when contaminant concentrations are believed to be near an action level (AL) or decision threshold, or to resolve disagreements among stakeholders.

Following the identification of the study problem and the development of a CSM, the next step in systematic planning is to identify study goals. This is accomplished by developing principal study questions, based upon the CSM, which, when answered, will allow the user to address the study problem identified in step 1. These questions can vary widely and may be different for different phases of the investigation process within a single project, for example:

- Does soil contamination exist (what is the nature of contamination), and if so, has the extent of soil contamination been delineated?

- Does the average concentration of one or more soil contaminants within the investigation area (IA) present unacceptable risk?

These types of questions can be successfully addressed using ISM. Because ISM is applicable to defined volumes of soil and sediment, it is an ideal tool for assessing risks from soils/sediment, comparing site concentrations to regulatory thresholds or other criteria, bulk material characterization for disposal, or other such problems requiring a high degree of confidence in contaminant concentration in a defined volume of soil/sediment. ISM can also be effective for documenting the presence or absence of significant contamination and establishing whether patterns or trends exist within an IA because it allows the user to efficiently obtain information across a large area.

Once the principal study questions have been developed, the user can develop alternative actions, which are logical responses to each potential outcome of the study question phrased as a decision rule. The process of developing alternative actions allows the project team to develop a consensus-based approach at the onset of the investigation, which minimizes the possibility of disagreements further along in the process. Examples of study questions and decision rules relating to hypothetical site investigations and remedial response are provided in Section 3.1.6.

3.1.4 DQO step 3: identifying information inputs (what are the specific inputs for the missing Information we need to evaluate the study goals?)

Having considered ISM during the formulation of the problem to be solved (step 1) and the decisions to be made (step 2), the project team is in a position to state what information is needed and whether/how the ISM methodology can provide some or all of the data needs pertaining to soil and sediment concentrations. It is in this context that the project team should begin to examine and develop ideas pertaining to the attributes of DUs for ISM sampling.

Project teams may need to identify SUs, or the subdivisions of DUs from which separate ISM samples are collected. The boundaries of an SU indicate the coverage of a single ISM sample –SUs define the scale of the ISM sampling and concentration estimation, whereas DUs define the scale of the decision(s) based on that sampling. These definitions allow for the possibility that ISM samples from several SUs composing a DU can be used collectively to make the decision on that DU. It is also possible to employ SUs to address sampling objectives that do not have a clearly associated DU, such as when sampling to evaluate trends in concentrations with distance or depth from a source. Indeed, information from such sampling may itself be used as an input to redefine a DU’s boundaries. The final criterion of whether an area sampled using ISM is an SU or a DU is whether or not ISM samples from only that area will be used to support a decision.

| SUs define the scale of the ISM sampling and concentration estimation, whereas DUs define the scale of the decision(s) based on that sampling. |

One application of SUs is to collect information about average soil contaminant concentrations in subareas of a DU where soil concentrations are suspected to differ based on the CSM. Similarly, SUs might be used to distinguish subareas of a DU where exposure intensity is expected to differ. In either case, the DU is divided into multiple SUs, each of which is separately sampled with one or more ISM samples. Examples related to the use of SUs in such a manner are provided in Section 3.1.6. A general discussion of the concept of stratification in sampling design is provided in Section 2.5.3.1.

SUs may be advantageously used when sampling a very large area where, due to costs or other limitations, sampling 100% of the footprint of a DU is impossible. For example, a 100-acre DU might be sampled by randomly placing fifteen 1-acre SUs within the DU boundary. In this situation, the SU data are treated in an analogous manner as data from traditional composites or discrete samples to estimate the mean within the DU. As discussed in Section 3.2, ISM data can often be treated as any other data with respect to environmental statistics. An example of how a large DU can be sampled with SUs in this manner is provided in Section 3.1.6.

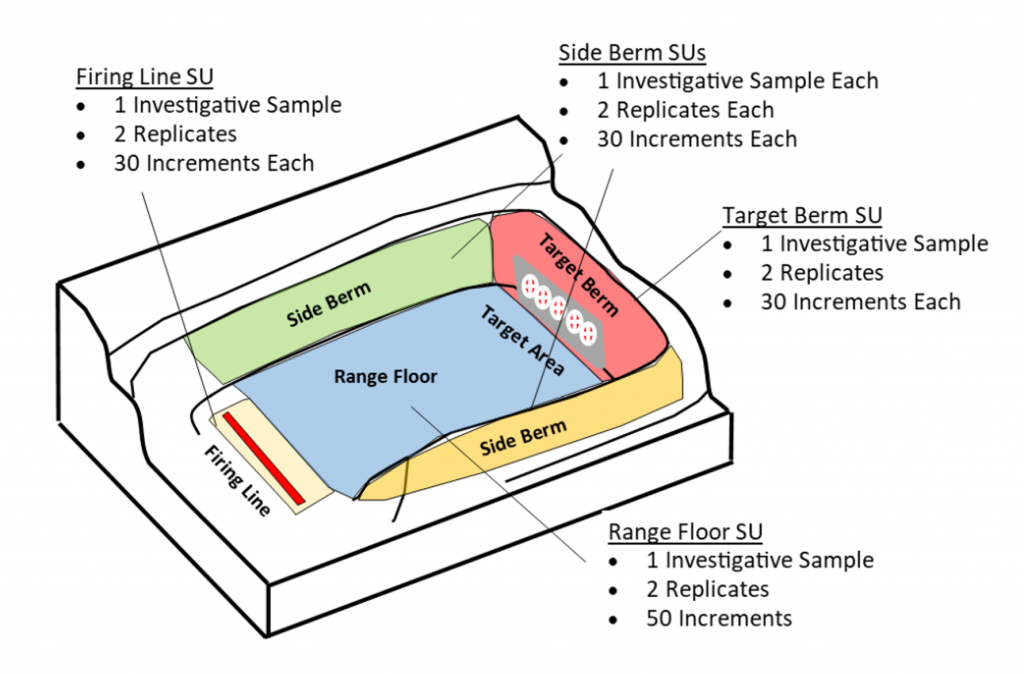

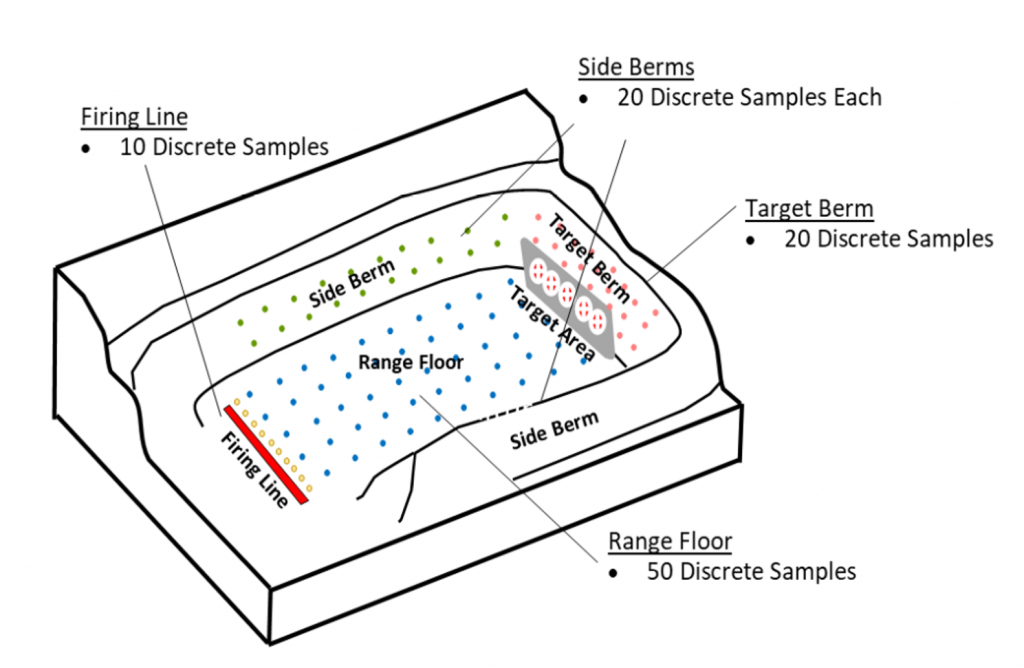

Caution should be used when applying SUs in ISM study designs. As with other ISM sampling designs, the sizing of DUs should be based on the expected scale of heterogeneity in contaminant concentrations. For example, using a large DU containing non-contiguous SUs may be appropriate to characterize a site where contamination is uniformly distributed based on the CSM, such as aeolian mercury contamination from a power plant or a metals background study. However, such an approach may not be appropriate for a munitions site where range features (target areas, firing lines, and so on) are or were present at the time the contamination was released. For such sites, DUs should be defined for each area representing a unique release profile to aid in site characterization of the nature and extent (N&E). The area and depth of the SU are presumed or already demonstrated through pilot studies to have relatively homogeneous contaminant concentrations that are the results of similar source release mechanisms or dispersion mechanisms.

3.1.5 DQO step 4: define study boundaries (what are the appropriate spatial and temporal boundaries for evaluating the study goals?)

This part of Section 3.1 is the most specific for understanding how to define the number, locations, and dimensions of SUs and DUs to achieve both study goals and support site decisions. The definition of study boundaries for ISM is addressed in the context of informing two interrelated questions that were introduced in Section 3.1.3 as the main objectives of soil and sediment sampling: what is the N&E of contamination, and what is the average contaminant concentration in some defined area?

To address the interdependency of these objectives with ISM, they will be addressed from the premise that understanding patterns of contamination in impacted media as part of an adequate site characterization will assist in designating DU sizes and boundaries. The overarching goal is to determine representative soil contaminant concentrations at a scale that is appropriate for decision-making. For either objective, preliminary data from ISM replicates on the variability of contaminant concentrations can be used to guide delineation of DUs and decisions on the number of increments needed to meet the study goals.

3.1.5.1 Study boundaries related to estimating average soil concentrations in a DU

There are two primary types of DUs that pertain most directly to a study goal of estimating the mean within a defined area: those based on the known locations and dimensions of source areas, called source area DUs or nature and extent DUs (N&E DUs), and those based on the known locations and dimensions of areas within which human or ecological receptors are randomly exposed, called exposure area DUs or simply EUs. In both cases, the primary objective of sampling is to estimate mean contaminant concentrations within a defined volume of soil.

A source area is defined as a discernible volume of soil (or waste or other solid media) containing elevated or potentially elevated concentrations of contaminant in comparison to the surrounding soil such as:

- areas with stained soil, known contamination, or obvious releases

- areas where contaminants were suspected to be stored, handled, or disposed

- areas where sufficient sampling evidence indicates elevated concentrations relative to the surrounding soil over a significant volume of contaminated media

N&E DUs are differentiated from exposure area DUs in that the boundaries of N&E DUs and the scale of sampling are based on a reasonably well-known extent of contamination, while the boundaries of exposure area DUs are determined through the exposure assumptions of the receptors in the risk scenario.

N&E DUs. Source areas are of concern in an environmental investigation because contamination can migrate from source areas to other locations and media (such as leaching to groundwater, volatilizing to soil gas and/or indoor air, overland transport, or running off to surface water), and also because direct exposure to source area contamination may be of concern. The identification and characterization of source areas is an important part of any environmental investigation. N&E DUs can be identified by using various methods, including observation, review of site records, preliminary samples, field analytical samples, wide-area assessments, aerial photographs, interviews, and site surveys. Ideally, source areas are identified based on knowledge of the site before DU designation and subsequent ISM sampling. However, source areas can also be discovered through the interpretation of sampling results.

As discussed in Section 3.1.4, it may be advisable to designate smaller N&E DUs or SUs within larger DUs based on an understanding of potential contaminant distributions. Assessment of a smaller subarea might be motivated by knowledge of site history or topography that could influence fate and transport, leading to an area where concentrations are higher relative to the surrounding soil (that is, a secondary source area). A common example of an N&E DU within a larger DU relates to the investigation of lead soil concentrations in the yards of homes known or suspected to be contaminated with lead-based paint chips. An area around the perimeter of the house might be designated as a separate DU and characterized separately from a larger DU consisting of the entire yard. This is illustrated with an example in Section 3.1.6.2, Example 2B.

Exposure area DUs. Exposure area DUs, or EUs, are a fundamental part of many environmental investigations and are a key tool in risk assessments and risk-based decision-making. An EU in the context of ISM is defined as an area where human or ecological receptors could come into contact with contaminants in soil on a regular basis (refer to exposure area discussion in “Risk Assessment Guidance for Superfund, Vol. I, Human Health Evaluation Manual (Part A)” and “Ecological Risk Assessment Guidance for Superfund; Process for Designing and Conduction Ecological Risk Assessments” (USEPA 1997).

The concentration data collected from an EU can be used to screen risk by using published criteria or to otherwise assess risk to human and ecological receptors. The data are commonly used to develop EPCs, which are generally estimates of the average concentration of a contaminant within the EU. When the remedial decision is to be based on risk assessment results, the EU should represent the area (and depths) where exposure has a high probability of occurring. The size and placement of EUs depend on current use or potential future use of the site, as well as the types of receptors that are expected for each of the land use scenarios. When systematic planning considers soil and sediment data collection to support risk assessment or risk-based decision-making, a primary question is, “Over which area and depth do samples need to be taken to reasonably represent potential exposures of concern?” An EU is commonly a spatially contiguous area within which a human or ecological receptor is generally assumed to be exposed over time in a random manner, and this random pattern of exposure is the basis for using the average to represent the EPC. Practically, we rarely know with a high degree of confidence what the exact size and location of a future exposure area is going to be, although we can make reasonable assumptions or reference default values for certain types of land use. This uncertainty regarding future exposure is why it is important to consider both source areas (based on the known or inferred spatial pattern of contamination) and likely exposure areas in developing DUs.

Lastly, although it is common and practical to discuss EUs based primarily on area, the nature of soil sampling requires that we also consider depth when defining an EU. If, for example, an exposure model states that the activities of humans or burrowing animals might reach a certain depth, then the average soil concentration from the ground surface to that depth is of interest. But here it is especially important to recognize that although, for example, humans could excavate soil to a depth corresponding to a basement, we do not necessarily know they will or what the exact location and volume of the excavation will be. If contamination is surficial, it will generally be inappropriate to assume that future excavation will certainly result in dilution of the contamination through mixing with clean subsurface soil. These ideas concerning EUs are illustrated with examples in Section 3.1.6.

3.1.5.2 Study boundaries related to evaluating the N&E of contamination

ISM can be used to determine the N&E of contamination in soil and sediment at contaminated sites. This section addresses the use of ISM to evaluate the vertical and lateral extent of contamination, and to identify subareas of elevated soil concentrations. The use of ISM in conjunction with field screening tools is also briefly discussed.

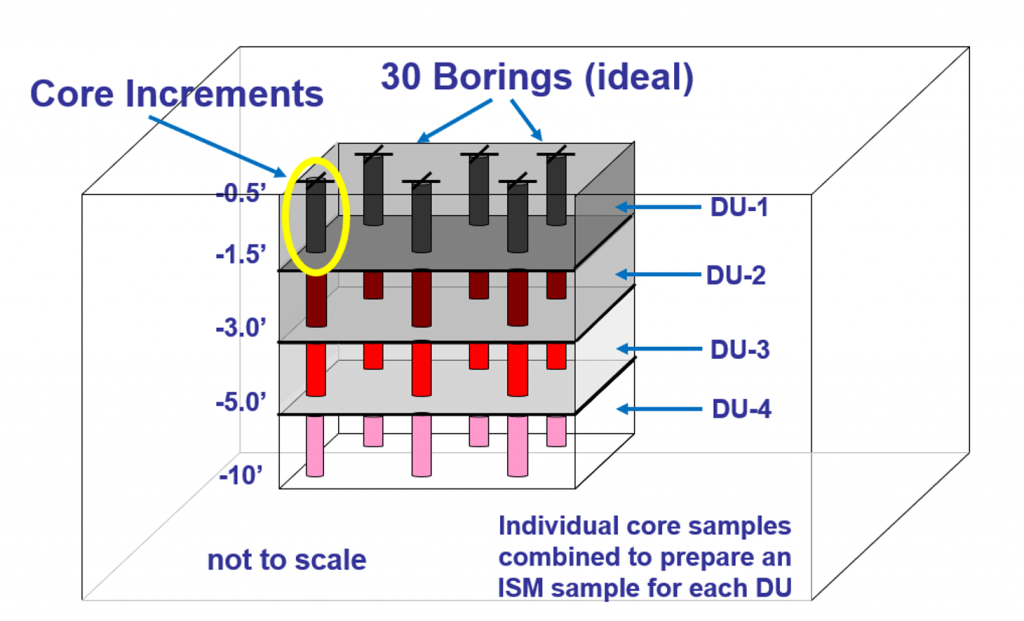

Evaluating the vertical extent of contamination with ISM. Subsurface DUs are an important application of ISM sampling because of the frequency with which subsurface contamination is encountered. In some situations, contamination may be situated entirely below the ground surface. Subsurface DUs are often tabular shaped, like thin books, and the number and thickness of these vertical intervals must be carefully considered based on the CSM, site geology/hydrology, potential receptors, existing data, and applicable state regulation and guidance. Objectives for the investigation related to assessing the N&E of contamination in the subsurface might include one or more of the following:

- determining whether leaching of contamination from soil to groundwater may have occurred

- estimating average soil concentrations by depth interval(s)

- estimating the volume of contaminated soil that may need to be removed or properly managed

Ideally, the nature and quality of ISM subsurface samples should be similar to those collected for more easily accessible surface soils, and in a manner that allows every possible increment in the DU an equal likelihood of being collected. Sampling theory also indicates that the entire cross-section of the DU be sampled in each increment making up the ISM sample, but in practice, the combined mass of the increments from a large number of borings would likely result in an impractical sample volume. Therefore, field subsampling plans may be needed to achieve sampling objectives.

Sampling approaches for subsurface soils differ from those applied to surface soils because access to the subsurface is more difficult. It is not uncommon to design an ISM sampling approach for subsurface soils that has less increments than are used in the respective surface investigation, but this does not mean that low-quality data are generated for these subsurface samples. Adequate data can be generated with fewer increments in subsurface sampling when geological heterogeneity and the end use of the data are understood, and this should be addressed during the planning process. Moreover, potential limitations of the data should be clearly discussed, and the implications regarding uncertainty in mean soil concentrations should be taken into account in risk management decisions. Section 4 goes into further detail on sampling techniques for subsurface soils. Example of subsurface sampling designs are provided in Section 3.1.6.

Evaluating the lateral extent of sediment contamination with ISM. When existing ISM data indicate high concentrations of contaminants are locally elevated in soil or sediment, such data may be sufficient to establish the boundaries of a source area. However, in other situations, it may be necessary to refine the study goals and redefine the number and boundaries of DUs based on information from additional sampling.

An example of applying ISM to address data needs pertains to the evaluation of trends in contaminant concentrations as a function of lateral distance. Contiguous ISM SUs along a drainage can provide sound information on contaminant concentration trends and also provide information on average concentrations on the scale of one or more SUs. In some situations, designation and testing of anticipated clean boundary DUs around anticipated areas of heavy contamination can help to minimize the need for remobilization. Examples of an ISM application to evaluate the lateral extent of contamination is provided in Section 3.1.6.

Evaluating the potential presence of subareas of elevated contaminant concentrations with ISM. Historically, discrete soil sample results with concentrations above an AL have often been assumed to represent a significant volume of surrounding soil containing sufficiently high concentrations of contaminant to warrant concern. The concentrations in these assumed volumes have been considered to represent source areas, which are defined in various ways by different regulatory bodies (ITRC 2008). This range of definitions can lead to a wide range of interpretations and has typically led to additional sampling events to further define the N&E as parties struggle to determine what qualifies as an area of elevated concentration versus a source area. It is highly recommended that project teams include their state regulators early in the planning process and that all stakeholders agree upon the basis for defining and distinguishing elevated concentrations from source areas.

One reason why ISM uses so many increments (a minimum of 30) is to have sufficiently dense spatial coverage of the DU. This spatial density improves the chance that the field sample will include significant areas of elevated concentration in the same proportions as present across the DU. An important ISM principle is that DUs should not be designed in a way that results in dilution of significant volumes of highly contaminated soil from smaller areas. The location and size of source areas can often be established or hypothesized based upon site history, including waste disposal units, locations of known or suspected spills or releases, and volumes of soil shown by previous sampling to have significant contaminant concentrations relative to the surrounding soil. In other cases, the presence of subareas of soil with relatively high concentrations is suspected, but the locations are uncertain.

A DQO study goal could be to find significant small areas (horizontal and depth) of elevated contaminant concentration(s) above risk-based concentration(s) or an AL within a DU. The DU could be comprised of several SUs designed to meet the “small area” volume requirement. It is in the systematic planning phase that project teams must define and designate what concentration and what volume, surface area, or mass are significant to their decision-making. To define the size and concentration of a significant small area of elevated contamination, they can use an Excel spreadsheet tool, if the critical condition of a mature CSM is met. For an example and more details on this concept, link to White Paper (Crumbling 2014).

| Statistically based sampling designs can be developed to determine whether localized areas of higher soil concentrations exist, even if the locations of such subareas within a larger site are unknown. |

The spacing of increments (and thus the number of increments needed to fill the DU’s area) can be set to have a desired statistical probability of increments being collected from within an area of defined size for incorporation into the field sample. In this case, if the size of a potential subarea of elevated concentrations is specified, sampling can be conducted to determine whether one or more such areas exist within a DU with an objective degree of confidence and scientific defensibility.

A free software program developed by Pacific Northwest National Laboratory (PNNL) called Visual Sample Plan (VSP) is available to determine the increment spacing for the DU grid so as not to miss sampling from a significant small area of elevated concentrations within the DU (VSP 2019). VSP has varied statistical sample size designs built in to support sample collection using ISM. The designs are grouped into two general categories – estimating the mean and detecting elevated regions. Both designs are built with standard statistical sample size design principles – namely, the stakeholders must specify desired Type I and Type II errors and provide estimates for standard deviations associated with the sampling process as well as regulatory thresholds to which the sample values will be compared. VSP does not implement any of Pitard or Gy’s equations, although it similarly attacks the goal of accurately estimating concentration levels in soil. A validation study of VSP ISM sampling design for elevated regions at a military training range demonstrated reliable estimates of mean concentrations and corroborated spatial areas with statistically elevated concentrations within the DU for 2,4-dinitrotoluene (2,4-DNT) (USEPA, 2015).

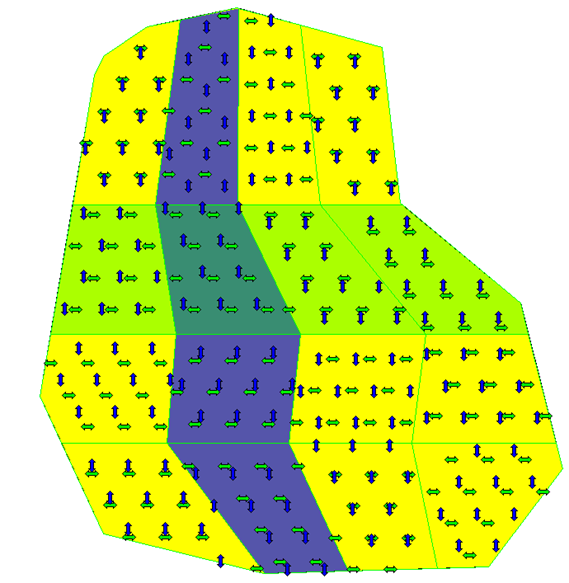

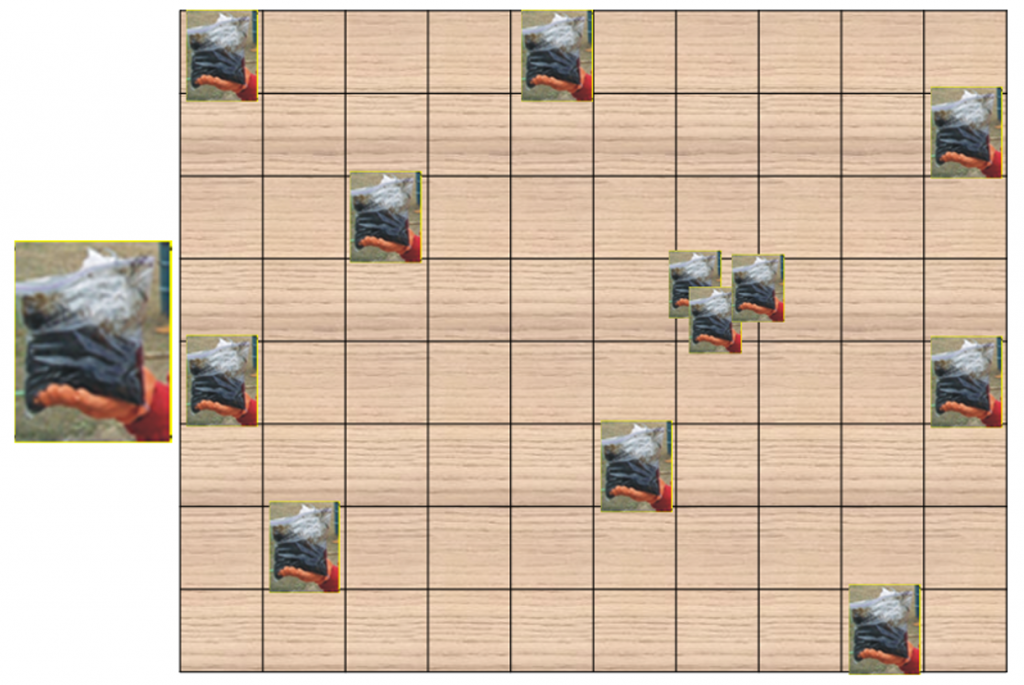

VSP’s elevated regions module sampling pattern and design differs from the typical ISM sampling pattern and design described within this document and presented in the examples in both Section 3.1.6 and the case studies in Appendix A. VSP’s elevated regions employ a pattern of rows and columns to design increments for an ISM sample in such a way that they can be combined into ISM samples but still used to spatially locate areas of high contamination. Figure 3-3 depicts a VSP 4 x 4 ISM row-column design with 16 cells. VSP can calculate either the number of incremental samples to achieve a desired power of detecting contamination above a specified level or the probability of detecting an elevated concentration, given a specified number of increment samples.

Source: VSP help file, https://vsp.pnnl.gov/help/.

As with any statistical tool, there are important assumptions and limitations for the user and project team to consider:

- Users must understand the assumptions of the statistical models used in VSP.

- The closer the analyte’s actual data distribution and variability agree with the assumptions of the underlying statistical model, the more accurate VSP’s output will be.

- Even when inputs to statistical calculations are reliable, the numerical outputs of statistical calculations are still imperfect estimates of field concentrations, receptor exposures, and cleanup volumes.

Moreover, there are caveats specific to VSP:

- The user must upload a map of the area (DU) or depict a sampling area (DU) first to enable the ISM Elevated Regions module within the Locate Hot Spots part of a Sampling Goal.

- For very complex shaped sample areas, the site division algorithm does not work well.

- The grids for cells can be square, rectangular, or triangular.

- The user is required to have data on or make a conservative assumption regarding the SD within the small area of elevated concentration and the SD within the remaining IA in the DU. If comparable studies with variance estimates are not available, a pilot study may be needed, which will affect cost. If assumptions on the variance are too conservative, unnecessary costs may be incurred.

- The VSP elevated regions module sampling pattern and design differs from and is more costly than the typical ISM sampling pattern and design, but it provides specific levels of confidence in detecting small areas with significantly elevated concentrations.

- For ISM designs to estimate the mean, VSP does allow the user to input the costs associated with the sample collection and measurement. The costs input are utilized by VSP to propose the most cost-efficient way to aggregate the increments from the DU into the ISM samples with a predicted level of confidence in locating elevated regions in the DU.

During systematic planning, the project team must ensure their study site meets the assumptions and that they have weighed the limitations and caveats for VSP against the study goals. For more details on this concept, see the White Paper (Crumbling 2014). Users are strongly encouraged to fully understand and consult the additional details on VSP designs plus the inherent assumptions and limitations that are available in the VSP help files (https://vsp.pnnl.gov/help/). VSP help for the ISM elevated regions module are under the Sampling Goal menu, Locate Hot Spots, Locate Hot Spots Using MI Samples.

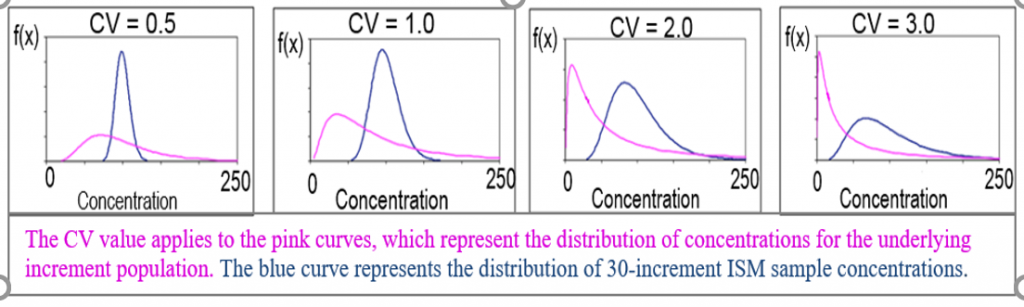

Another approach, but one that lacks the statistical rigor of a defined statistical probability of increments being collected from within an area of defined size, would be to increase the number of increments and thereby the spatial coverage in the DU, to improve the chance that the sample will include significant areas of elevated concentration in the same proportions as present across the DU. A large relative SD (RSD) among replicates can be used as an indication that a small area of elevated concentration in the DU was sampled in one replicate but not in another. This condition might trigger additional investigation with more replicates from the DU, more increments in the DU, or subdividing the DU into multiple smaller SUs. (See Section 3.2.4.2 text and Table 3-2, which classifies heterogeneity of increments in terms of low, medium, and high coefficient of variation [CV] of replicates.)

Effective detection and delineation of areas of elevated concentrations in heterogeneous soil matrices is a challenge. To avoid the pitfalls of “chasing” areas of elevated concentration, ISM practitioners are encouraged to define an area or volume of concern as part of the SPP. Similarly, the planning team is encouraged to define decision rules related to the assessment of the data acquired. An example of such an ISM application is provided in Section 3.1.6.

Use of field screening methods with ISM. Field screening methods can sometimes be used in conjunction with ISM to expedite evaluation of the N&E of soil or sediment contamination. ITRC provides guidance for the selection and use of field site characterization tools to support development of a CSM, plan for the collection of samples for laboratory analysis, and provide input for considering remedial strategies (ITRC 2019). Field portable XRF and gas chromatography are techniques that can be used to gain an understanding of the N&E of contamination and help define the boundaries of SUs or DUs. “EPA Test Method 6200” (USEPA 2007) provides guidance for the use of field portable XRF spectrometry for determining metals concentrations in soil and sediments. Although the guide was written in 2007 and considers the best available technology at that time, its recommendations are valid and still employed in present-day publications and studies. Field portable gas chromatography can be used to evaluate soil and sediment concentrations of organic chemicals, particularly volatile compounds.

3.1.5.3 Laboratory processing of ISM soil and sediment samples

The manner in which soil and sediment samples are processed can affect measured contaminant concentrations in these samples and whether the concentrations are consistent with the assumptions underlying human and ecological exposure models. During the planning process, the project team should consider the physical and chemical characteristics of suspected contamination and the end use of the data to choose the most appropriate sample processing options. There are four issues and related questions that the project team should consider during planning:

- moisture management (Is air-drying of the samples acceptable?)

- particle size selection (Should the samples be sieved or otherwise processed to exclude particles larger than a specified diameter?)

- particle size reduction (Should the samples be ground prior to analysis?)

- sample digestion/extraction (Should the mineral matrix of the sample be dissolved, or should digestion/extraction target the contaminants adsorbed in soil particles or otherwise present in soil?)

The specific analytes that are the focus of the investigation can influence sample processing decisions because there can be a wide range of physical and chemical characteristics within analyte groups. Some characteristics that can influence the selection of sample processing options include boiling point, volatility, air reactivity, and sorption characteristics. The presence of high-concentration nuggets of contamination can also influence sample processing decisions. Section 5.2 provides detailed guidance on selecting sample processing options.

3.1.5.4 Considerations for determining the number of increments and sample mass

As covered in Section 2.5 and Section 2.6, the number of increments collected for an ISM sample and the total mass of the sample are the main factors controlling the representativeness of an ISM soil sample, where representativeness is the measure of how well the sample represents the entire mass of soil within an SU or DU.

Section 2.5 and Section 2.6 should be reviewed to understand the basis for selecting the number of increments for a given sample and the target mass of the ISM sample. Collection and analysis of a large sample mass helps to control what is referred to as CH or FE, which refers to the differences in contaminant concentration related to the physical or chemical characteristics of different soil particles. A large number of increments helps to control distributional heterogeneity, which refers to differences in contaminant concentrations due to the large-scale spatial distribution of contamination within the SU or DU.

The selection of the number of increments and sample mass is dictated by the anticipated degree of small- and large-scale heterogeneity, which might be influenced by the distribution of pockets of contamination across a DU, by contaminant chemical characteristics, by soil type and physical characteristics, and by the contaminant release mechanism.

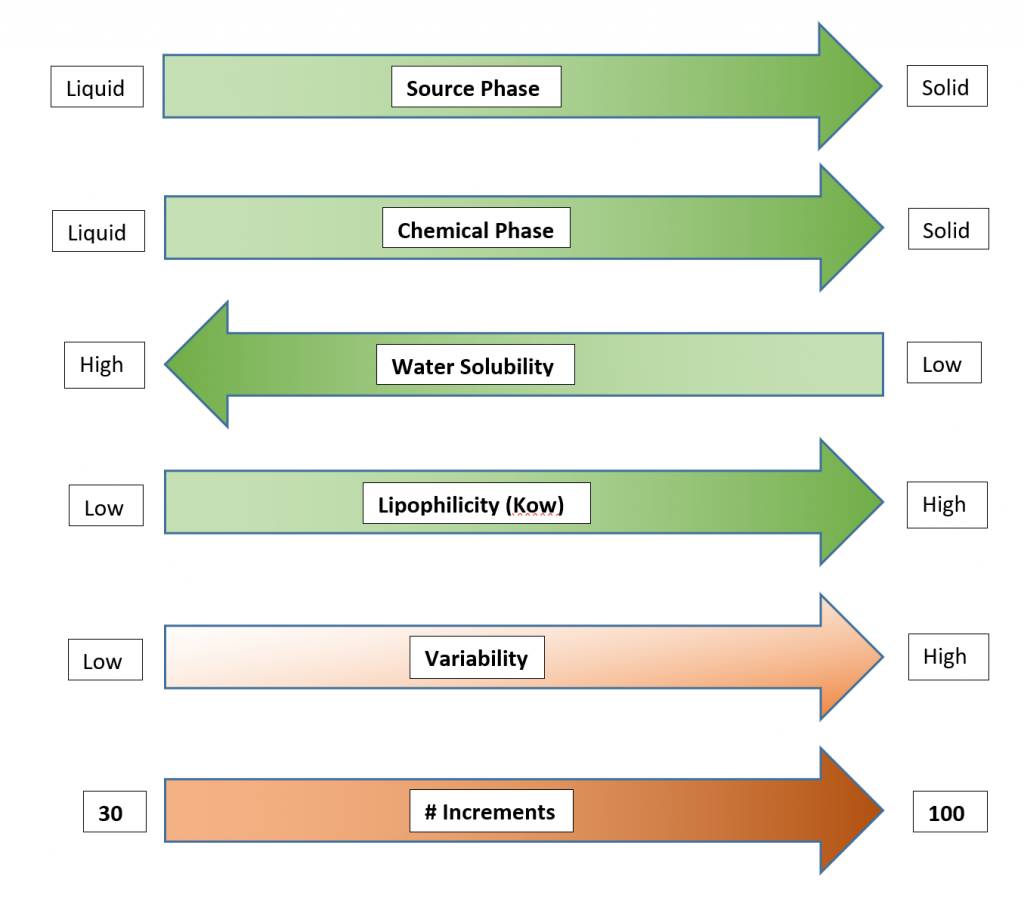

| It is generally accepted that between 30 and 100 increments is appropriate for many applications, with a larger number of increments being driven by a larger degree of distributional heterogeneity. |

Figure 3-4 presents various factors to consider in deciding on the number of increments to collect from a DU and their influence on heterogeneity. The graphic illustrates the influence of various physical and chemical factors – such as chemical properties, and whether a release is associated with the solid or liquid phase of soil – on potential variability and the related association of each variable to the number of increments to help control heterogeneity.

Source: ITRC ISM Update Team, 2020.

Collection of a field sampling mass greater than 1 kg is recommended. Final ISM field samples typically weigh 500 g to 2,500 g, and as discussed in Section 2.5.3.1, many laboratories will limit soil or sediment sample mass to about 2 g to 3 kg. In general, individual soil increments typically weigh 20 g to 60 g. Based on the target final mass of the ISM field sample and the number of increments specified to control distributional heterogeneity, the minimum mass of the individual increments can be calculated (see equation in Section 4.2.3). The mass of any single increment depends on the depth of interest, soil density, moisture content, and the diameter or size of the sample collection tool. In addition to the function of controlling CH, the mass of the final ISM sample must also be sufficient for the planned analyses, any additional QC requirements, and possible repeat analyses due to unanticipated field, laboratory, and/or QC failures. Note that sieving of soil samples at a specified particle size reduces the amount of soil mass available for preparation and analysis, although as discussed in Section 2.6.2.1, such sieving will also tend to reduce CH.

3.1.5.5 Common sampling designs used with ISM

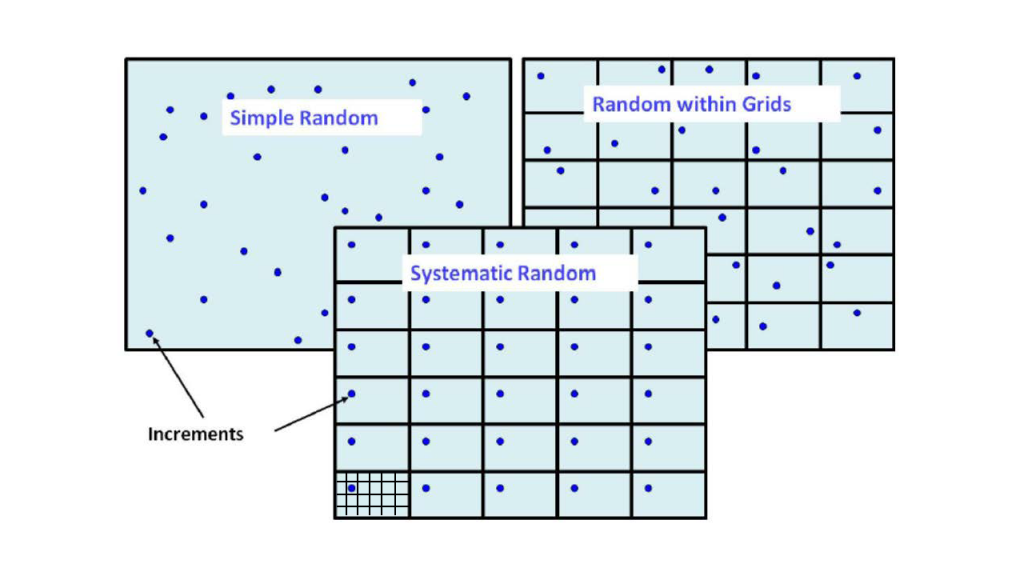

Planning and design for ISM shares many of the characteristics common to other types of environmental soil sampling. Among the common types of statistically based sampling designs are simple random sampling, stratified random sampling, and systematic random sampling. The element of randomness common to these designs allows statistical inferences to be made about the sampled population, as well as a defensible calculation of average contaminant concentrations within a DU. Implementation of these types of sampling designs, along with the basis for selecting among them, is discussed in (USEPA 2002e).

Examples of simple random sampling, stratified random sampling, and systematic random sampling are shown in Figure 3-5. In the case of stratified random sampling, the strata are shown as regular grids, thus the sampling design is labeled “Random within Grids.” For systematic random sampling, rather than selecting a random location for each grid cell within a DU, randomization is performed only once, and the randomly selected location within a cell is then applied to all other cells. This systematic random sampling design is also shown in Appendix A in Case Study 9, which contains a WP with exceptional articulation of the systematic random placement of increments. For further discussion ITRC 2012.

Source: ITRC ISM Update Team, 2020.

Up to this point, this section has provided a summary of the key aspects of systematic planning and DU design in relation to the collection of soil and sediment samples. Section 3.1.6 provides three examples that illustrate these important concepts in different situations.

3.1.6 Examples illustrating planning and design for ISM

The reader will notice that the three examples described here differ in how they were conceptualized and developed. They are presented to illustrate a range of situations and approaches, and to help the reader realize that while thoughtful planning is always necessary, there is no precise formula for how to evaluate a site. Each example illustrates a different application, interpretation, and development of a sampling plan. As discussed in Section 3.1.1, steps 1 through 4 of USEPA’s DQO process have been applied to help structure the discussion of systematic planning and to organize these examples. However, some material associated with later steps of the DQO process (particularly step 7, sampling design) is necessarily integrated in these three examples:

- an agricultural field, settling pond, and drainage swale (Example 1)

- former agricultural field and establishing exposure DUs (Example 2)

- former industrial facility that is to be redeveloped (Example 3)

3.1.6.1 Example 1: agricultural field, settling pond, and drainage swale

Four different ISM topics will be addressed through this example set:

- estimating average concentrations in a defined volume of soil or sediment

- evaluating the vertical profile of contamination in soil or sediment

- evaluating the horizontal extent of contamination along a drainage

- estimating average concentrations in stockpiled material for waste management decisions

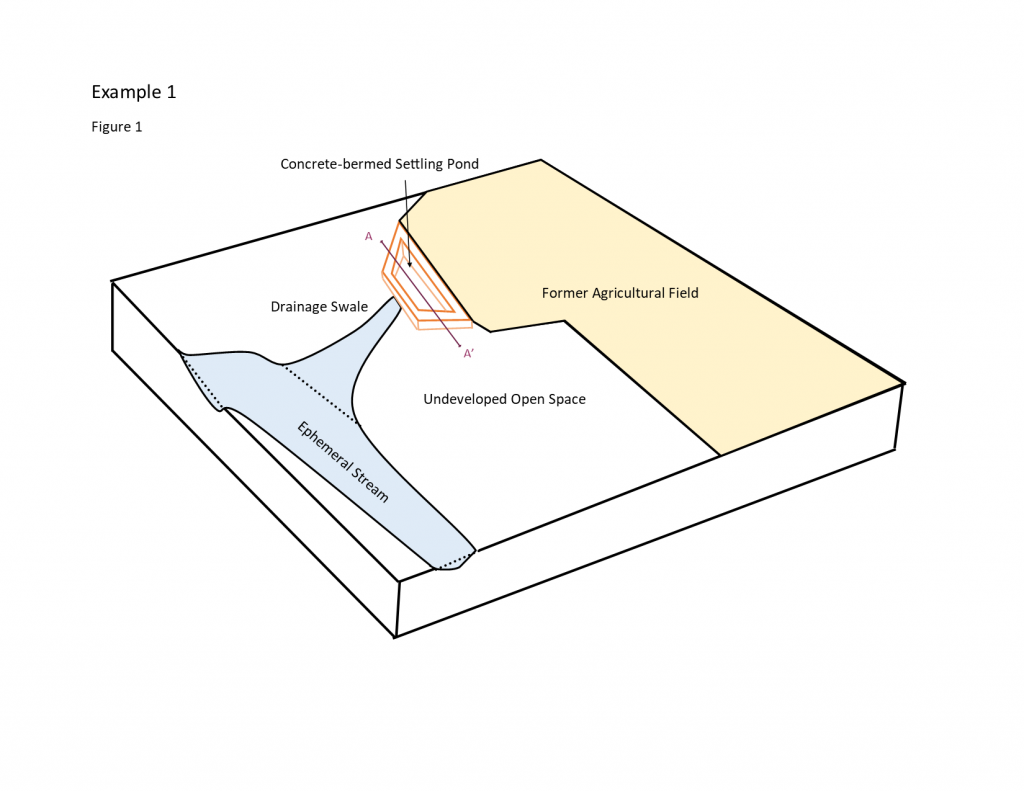

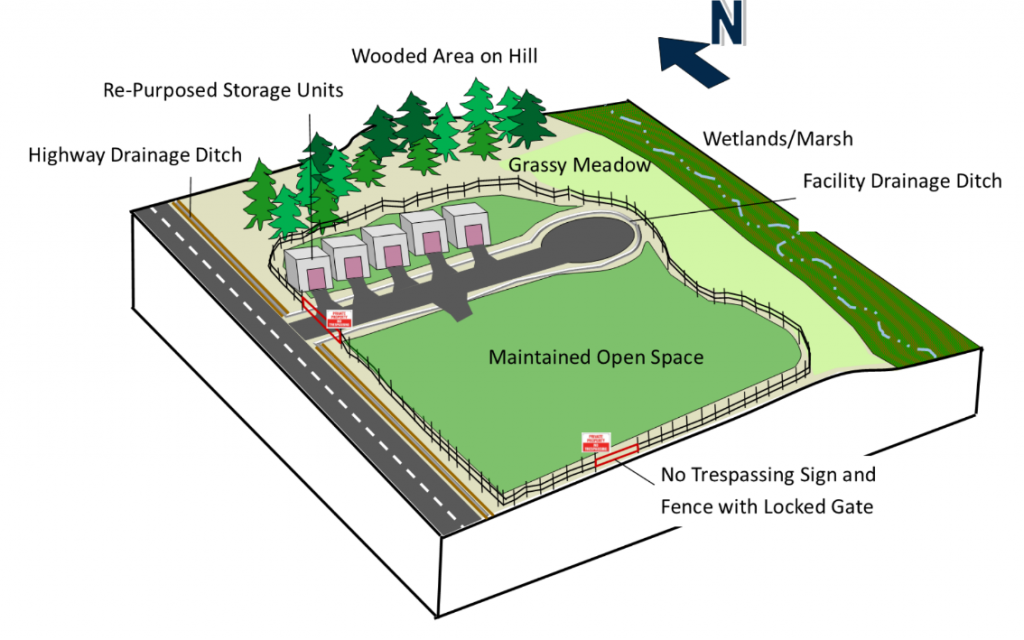

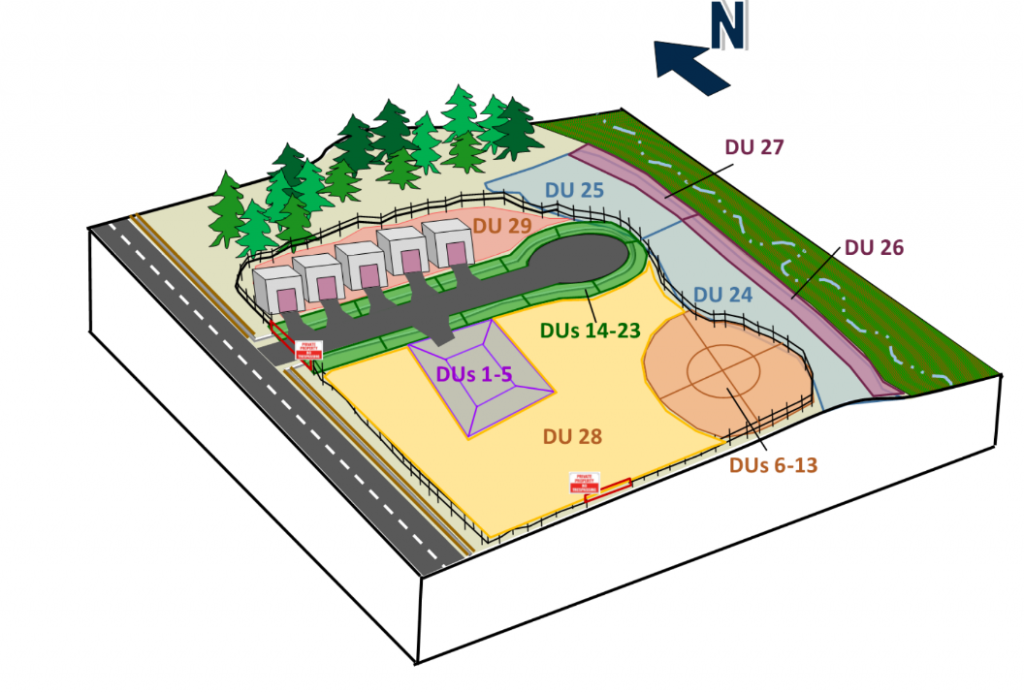

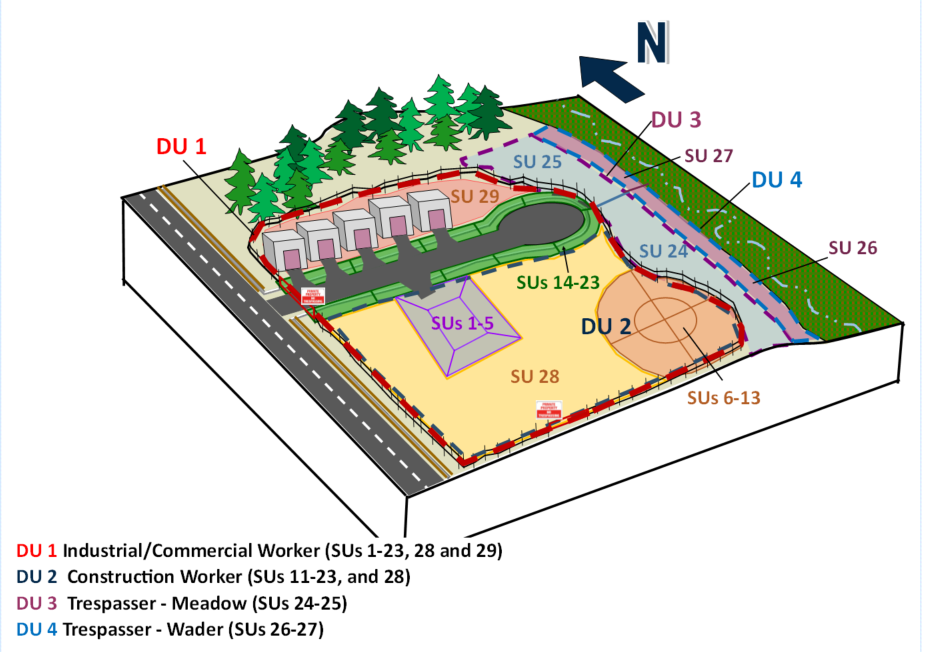

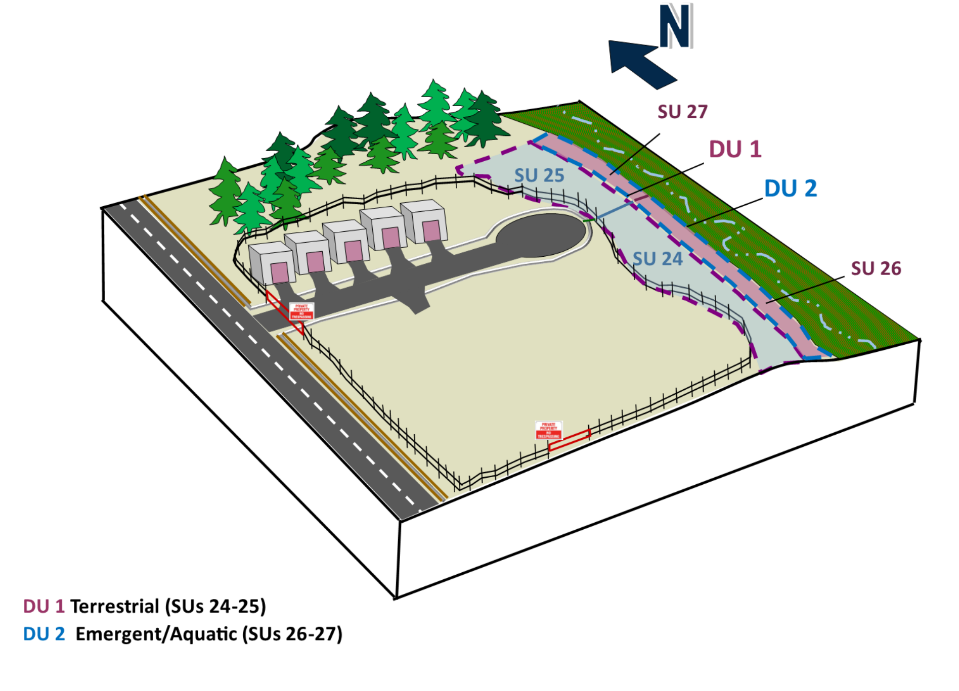

CSM. A bermed enclosure with a cement floor was used for holding irrigation water runoff for a large agricultural field that had not been actively farmed for decades. Water was supplied as flood irrigation to the field, and on occasions when excess irrigation water was applied, the runoff was captured in a 1-acre holding pond situated at a slightly lower elevation than the field. Organochlorine pesticides (OCPs) were historically used on the field, and soil samples from the field indicate that concentrations of several OCPs are above state risk-based soil screening criteria. The farmers note that there is about 6 ft of sediment that has accumulated in the settling pond, and also that the rates of pesticide application had increased over time when the field was being used, such that the more-recent deposits might have the highest concentrations of OCPs. Furthermore, the farmers point out a notch in one of the berms, on the other side of which is a cement apron that leads to a shallow swale. The swale has a gentle gradient and broadens as it leads toward an ephemeral stream that is about a half-mile away. The excess irrigation water reportedly rarely overtopped the berm, but there is little confidence in that observation (see Figure 3-6).

Source: ITRC ISM Update Team, 2020.

Problem formulation. The problem is defined as determining whether sediment concentrations in the settling pond, as well as the swale, could potentially present unacceptable risks to individuals who might currently access the area or to people in the future should the land be repurposed for residential or commercial uses.

Study questions. An initial question (study question 1) is posed as, “Does OCP sediment contamination present unacceptable risk under a residential scenario?”

This question reflects the understanding that residential land use is protective of any other exposure scenario. During the SPP, state soil screening criteria for OCPs are identified as inputs to this question. It is accepted that lateral patterns in OCP sediment concentrations are unlikely within the settling pond, due to the manner in which contamination was deposited, but the CSM’s prediction that the contamination decreases with depth to the cement floor of the settling pond should be confirmed with data. It is further assumed that, because the sediment pond received field runoff directly, OCP concentrations in pond sediments must necessarily be greater than those in the swale.

A second question (study question 2) is therefore posed as, “Are OCP sediment concentrations decreasing with depth in the settling pond?”

Decision rules and sample design for study questions 1 and 2. The first two study questions pertain to OCP sediment concentrations in the settling pond. From these two questions, a decision rule is developed applying the premise that the highest OCP concentrations will be found in the settling pond:

If average OCP sediment concentrations are below residential soil screening criteria in the surface interval, and concentrations are decreasing with depth, then take no further action, else characterize OCP contamination in the swale.

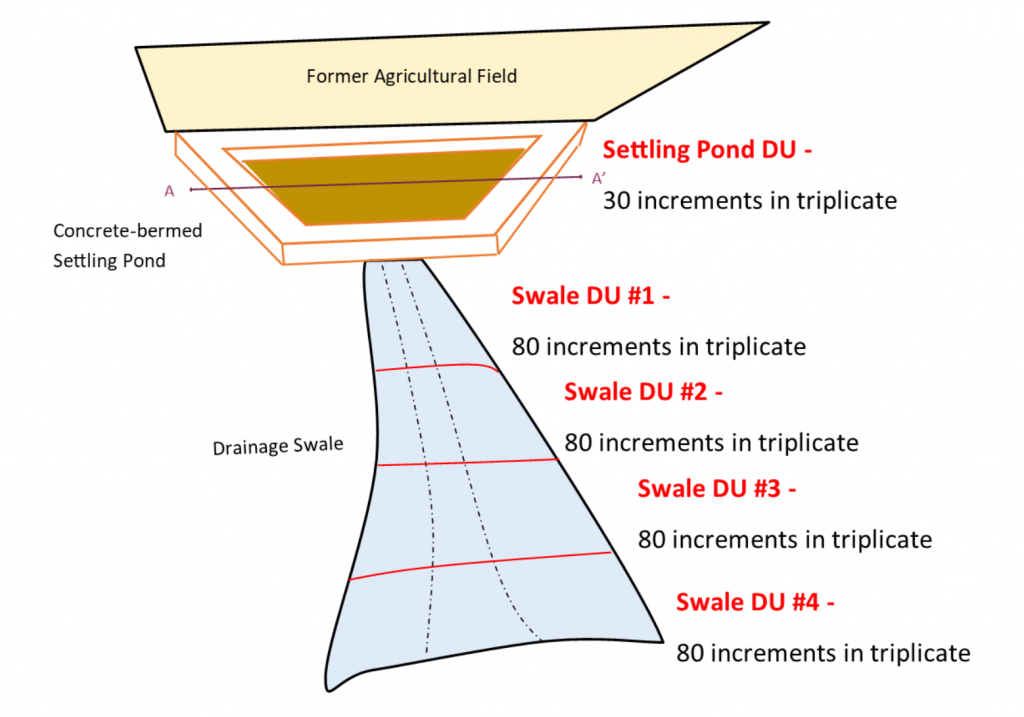

The lateral dimension of the DU area for study questions 1 and 2 is defined as the entire 1-acre surface area of the settling pond within the berms because, as noted in relation to study question 1, systematic patterns in OCP sediment concentrations within a depth stratum are unlikely within the settling pond. For study question 1, a surface sediment interval of 0 to 6 in, where OCP concentrations are expected to be highest based on the CSM, is defined. Because lateral heterogeneity is anticipated to be low, a value of 30 increments is selected from within the recommended range of increments (30 to 100) for the surface soil layer. Three replicates are proposed for the surface interval to support estimation of uncertainty in average OCP sediment concentrations (see Figure 3-7).

Source: ITRC ISM Update Team, 2020.

To address study question 2, the remaining depth of sediment (approximately 6 ft) is divided into three depth intervals of approximately 1 to 2 ft each. Although ideally 30 increments and three replicate samples would be collected from the deeper intervals, such as were obtained for the surface interval, the project team decides to phase the depth sampling because of the cost of sampling and the expectation based on the CSM that OCP concentrations at depth are likely to be low and relatively homogenous. Ten corings are proposed to obtain 10 core increments from each of three subsurface intervals corresponding to the approximate 6-ft sediment depth in the settling pond (0.5-1.5 ft, 1.5-3 ft, and 3-5 ft), with no replicates. Figure 3-8 depicts DUs pertaining to subsurface sampling, where DU-1, DU-2, and DU-3 are applicable to Example 1. A 1-kg sample is identified for collection as a plug subsamples from each of the three depth increment (see Section 4.5.1), resulting in a 10-kg sample mass for each subsurface interval. Because laboratories typically limit sample mass to a few kg, field subsampling (per discussion in Section 5.3.5) is proposed for the 10-kg samples to prepare a final 2-kg sample for shipping to the analytical laboratory. A second decision rule is developed specific to study question 2:

If OCP sediment concentrations in a depth interval are clearly below residential soil screening criteria, then take no further action, else consider either additional sampling to refine the estimate of average OCP concentrations (if concentrations are close to criteria) or remedial action (if concentrations are far above criteria).

When the settling pond analytical data for OCPs are received and evaluated, two key findings emerge. First, it is clear that OCP concentrations in all depth intervals exceed both residential and industrial state soil screening criteria. Also, there is relatively high variability among the three replicate samples of the surface sample interval, meaning the assumption of relatively homogeneous contamination seems to be incorrect. Based on the magnitude of the screening level exceedances, it was determined that proceeding with this relatively large degree of data variability was unlikely to result in decision errors, and that the data were sufficient to proceed to consideration of remedial action in the settling pond without further sampling (see study question 4 below).

Source: ITRC ISM-1 Team, 2012.

Decision rule and sample design for study question 3. Consistent with the decision rule for study questions 1 and 2, a design is developed to evaluate OCP contamination in the swale. The swale is divided longitudinally into 500-ft intervals between the settling pond and the ephemeral stream. As the swale broadens with distance from the pond, the areas of these swale segments also increase with distance: 5,500 ft2, 8,000 ft2, 15,000 ft2, 19,000 ft2, and so on. There is no visual indication of channeling or deposition within the swale. The range of surface areas in the first four swale segments, from about one-eighth to one-half-acre, are sized to fall within the range of areas applicable to both human and ecological exposure scenarios related to state soil screening criteria. Because there is no visual evidence of preferential areas of sediment deposition in the swale, and because the areas of the swale segments are within the range of potential exposure areas, there is minimal concern that there could be subareas of higher concentrations or hot spots within a swale segment. Therefore, contingencies for defining smaller DUs based on data evaluation are not proposed. The residential soil screening criteria applied for the decision rule for study questions 1 and 2 are also applied to the swale segments, since they are determined to be protective of potential ecological impacts.

A third study question (study question 3) is developed: “Do average OCP sediment concentrations in the swale present unacceptable human or ecological risk, and if so, has the lateral extent of contamination relative to such concentrations been established?” From this question, the following decision rule is developed:

If OCP sediment concentrations are decreasing with distance from the settling pond, and average OCP concentrations are below residential soil screening criteria, then take no further action in the swale, else consider additional sampling (to determine extent) and/or site-specific risk assessment or remedial action.

Each of the first four swale segments are defined as DUs. A sediment depth interval of 0 to 12 in is defined for sampling, based on a field survey that showed roughly this thickness of fine-grained material (similar to agricultural field soil) is present within the swale. Because heterogeneity of OCP concentrations in swale sediments is unknown, and given higher than anticipated heterogeneity in settling pond sediments, a value of 80 increments is selected from within the recommended range of increments (30 to 100). Three replicates are proposed for all four segments.

When the swale segment analytical data for OCPs are received and evaluated, OCPs are detected sporadically and only in the first two segments. The average concentrations of OCPs in these segments are below both residential and ecological screening criteria, so consistent with the decision rule for study question 3, no further action is proposed for the swale.

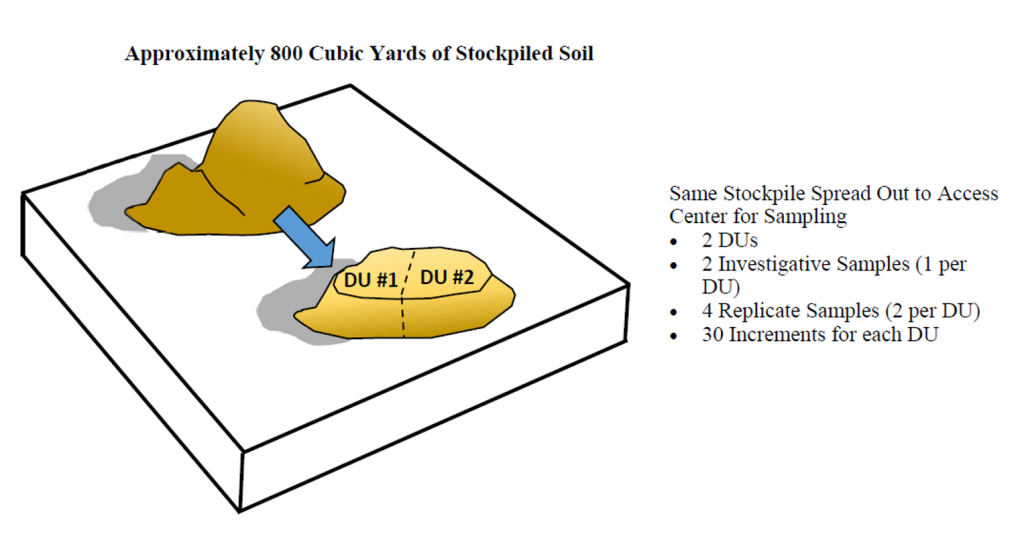

Decision rule and sample design for study question 4. As discussed, average OCP concentrations in all depth intervals of the settling pond exceed screening criteria by a relatively large margin, and evaluation of the three replicate data for the surface interval indicates that there is a high degree of variability in OCP sediment concentrations. Rather than continue in situ sampling, informal cost-benefit consideration suggests that it is advisable to excavate settling pond sediments and dispose of them in an appropriate facility. The OCP concentrations are near levels that differentiate between two disposal facility options with very different disposal costs. An excavation and stockpiling plan is developed to remove sediments by depth and stage them in a long and narrow stockpile that is arranged on the long axis from shallower to deeper sediments, since the analytical data indicate an inverse relationship between OCP concentration and depth.

A fourth study question (study question 4) is developed: “Are average OCP concentrations in segments of the stockpile above the acceptance criteria of the lower-priced landfill?” From this question, the following decision rule is developed:

If average OCP sediment concentrations in a stockpile segment are above the acceptance criteria of the lower-priced landfill, then send the material to the higher-priced landfill, else ship to the lower-priced one.

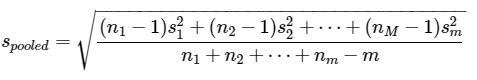

The volume of an individual stockpile segment, defined as a stockpile DU, is determined by transportation costs and minimal disposal quantity rules for the hazardous waste landfill. The stockpile is laid out with a depth of 2 ft to allow for cost-effective hand coring. Because heterogeneity is known to be high, and sampling costs are low, a value of 100 increments per segment is selected from within the recommended range of increments (30 to 100). Three replicates are proposed for all segments to support an estimate of a 95% UCL on mean OCP concentrations.

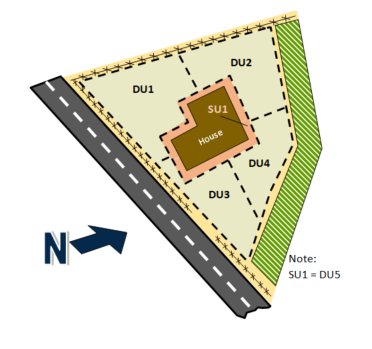

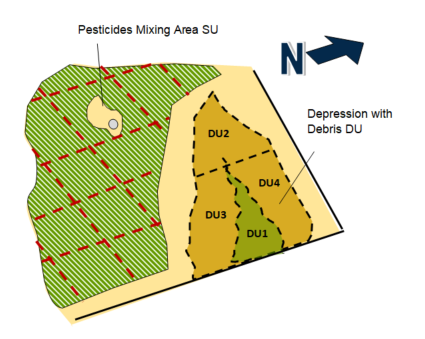

3.1.6.2 Example 2: former agricultural field and establishing exposure DUs

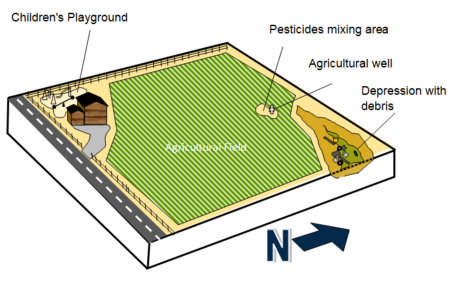

Example 2 focuses on developing and delineating EUs for human health risk-based study questions and will guide you through the development of ISM sampling plans with successively more complex site CSMs. Throughout Example 2, the risk-based study questions focus on current and potential future residential land use with no ecological receptors. The DU size is ¼ acre, the assumed size of a future residential lot. Residential lot sizes vary, thus planning with a regulatory authority and their risk assessor is essential.

Example 2A covers four concepts:

- establishing replicate heterogeneity limits in the DQOs as an MQO in Specific Study Goal data needs

- assessing the assumption of homogeneous contaminant distribution (low heterogeneity) by defining as RSD of 20% in a Decision Rule.

- extrapolating to unsampled DUs within a large study area

- designing background DUs

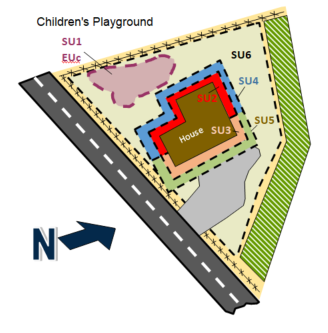

Example 2B covers three additional concepts:

- Designing source area N&E DUs within EUs

- Designing SUs within DUs (for example, a children’s play area within an adult residential DU)

- Designing for weighted averaging of 95% UCL

The problem formation (DQO step 1) is similar for both Examples 2A and 2B: determine the average concentrations of COPCs in surface soil to assess if potential risks are unacceptable to current and/or future residents. (Note that Example 1 provides guidance on subsurface sampling. Care should be taken to plan for the number of subsurface increments needed to obtain reliable concentration estimates with minimal uncertainty, like surface soil ISM sampling, for use in estimating potential risks.)

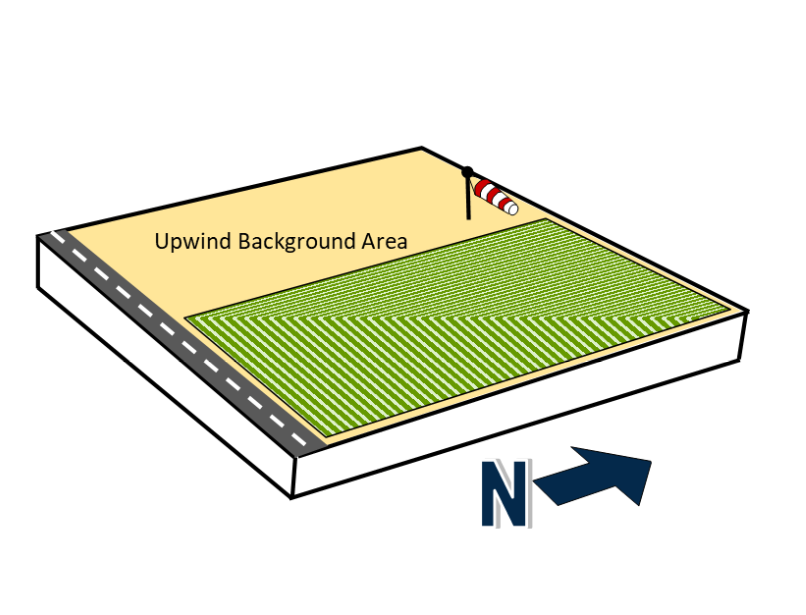

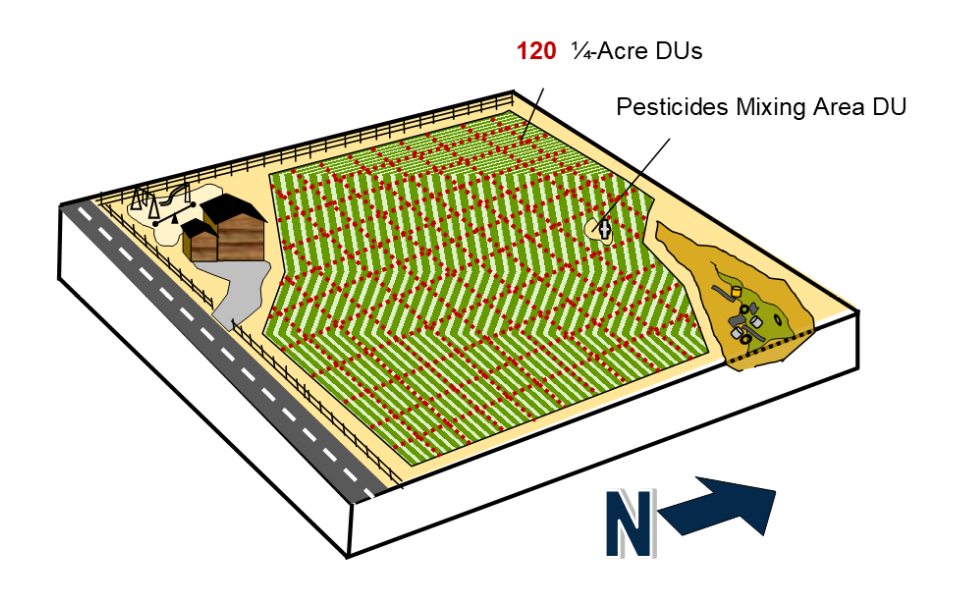

Example 2A. The CSM for Example 2A (Figure 3-9a) is a 30-acre agricultural use area that has been farmed since the early 1900s. Legal broadcast application of OCPs and arsenical pesticides, including lead arsenate, is the only suspected potential source of soil contamination and is limited to surface soil contamination with no migration of COPCs to the subsurface. The topography is flat, except for furrows between rows of plants. No localized areas of potentially heavy contamination were identified in a thorough Phase I Environmental Site Assessment (ESA). Moreover, county records indicate that, in recent years, there has been no use of triazine herbicides, carbamates, or organophosphate pesticides. There are no known or suspected pesticide mixing areas, and no existing structures or historical aerial photographs show any evidence of structures dating back to the 1920s. The site is surrounded by agricultural fields, except an area to the west that has never been farmed or had any other known uses based on historical photographs and county records. The site is scheduled to undergo residential development.

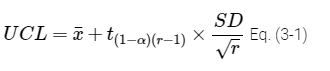

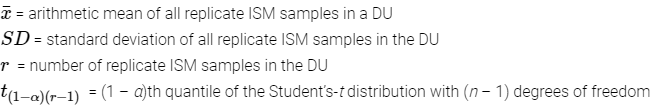

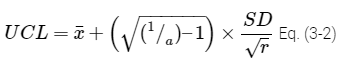

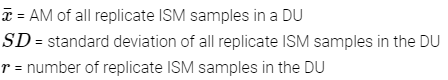

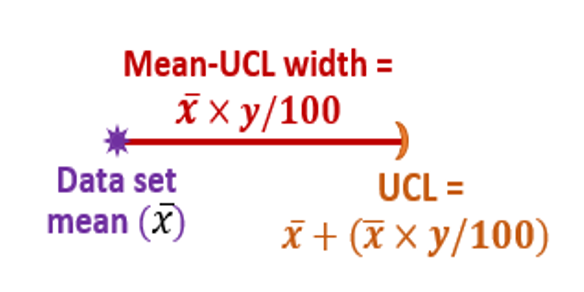

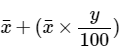

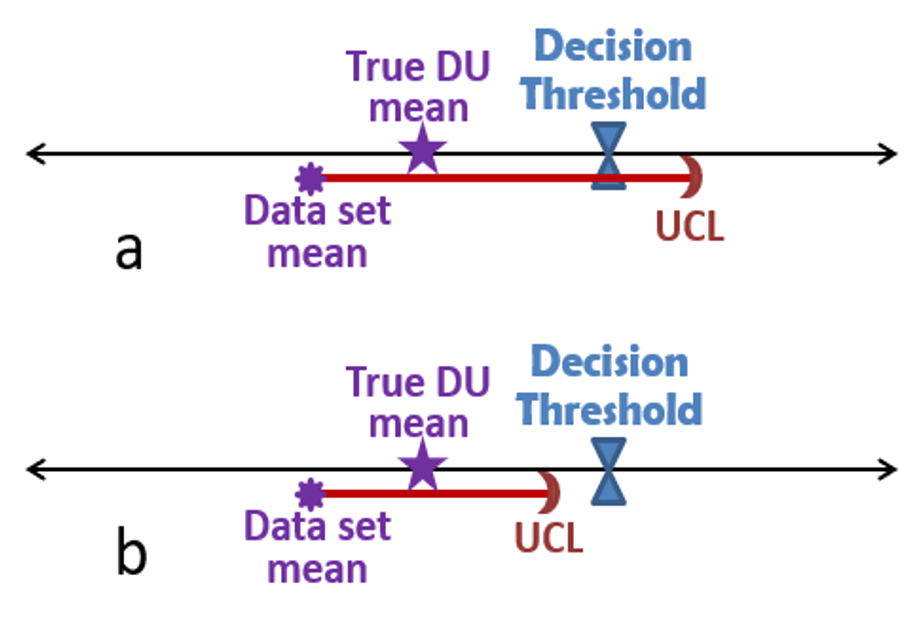

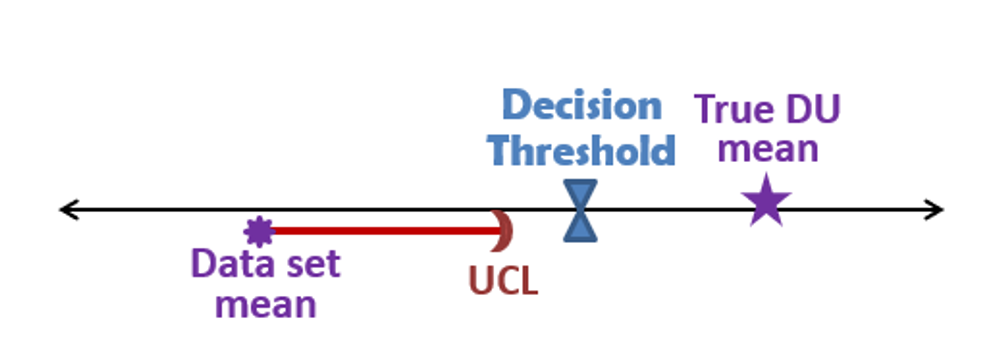

Problem Formulation – Identify decisions needed and develop CSM. The goal of the ISM sampling event is to determine the average concentrations expressed as the 95% UCL of arsenic, lead, and OCPs in surface soil to assess potential future residential risks and ascertain if cumulative risks or hazards exceed the regulatory acceptable points of departure of 1 x 10-6 and 1.0, respectively (see Section 1, where 95% UCL is defined, and Section 3.2, which has a discussion on 95% UCL).

For risk-related problems, problem formation will almost always entail the following sequence of steps to generate the preliminary CSM and potentially complete exposure pathways:

- identify potential primary source areas/release mechanisms

- identify potential secondary source areas/release mechanisms

- identify media that could be impacted by such a release/migration (exposure media)

- identify receptors, both current and future, that could come into contact with these contaminated media and the exposure routes (ingestion, inhalation, or dermal)

First, generate the preliminary CSM and potentially complete exposure pathways to establish EUs.

- Primary source areas/release mechanisms. The only potential source for Example 2A is the agricultural field, with the release mechanism being the legal broadcast application of OCPs and arsenical pesticides, including lead arsenate. There have been no known releases to the adjacent background area that is upwind from the agricultural field.

Source: ITRC ISM Update Team, 2020.

- Secondary source areas/release mechanisms. The broadcast application of pesticides leads to contaminated soils as a secondary source. Secondary releases of COPCs from surface soil can occur from transport of these non-volatile COPCs in surface soil via wind dispersion and plowing of the agricultural field.

- Exposure media. The exposure media are limited to surface soil (defined as the top 6 in).

- Receptors and routes of exposure. Future residential receptors may be exposed to COPCs in surface soil via incidental ingestion, inhalation of particulates, and dermal contact.

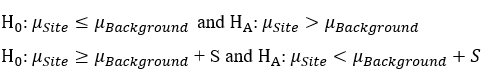

Identify Study Questions – Identify objectives and COPCs. To determine what environmental data are needed to achieve the goals of the ISM investigation, the project team develops the study questions that will guide the sampling and analysis plan in conjunction with the CSM. Example 2A has two study questions; the resulting decision rules are used to develop consensus on ISM results-based actions to help define the data quality needs:

- Study question 1 – Are the average metals concentrations expressed as the 95% UCL in the agricultural field within ambient background concentrations?

- Decision rule 1 – If the 95% UCL soil metals concentrations are within ambient background concentrations, then do not include metals in the risk assessment, if not, include metals as COPCs in the quantitative risk assessment.

- Study question 2 – For each EU in the agricultural field, are the average concentrations expressed as the 95% UCL for each OCP and each metal below risk-based levels of concern?

- Decision rule 2.1 – If OCPs are not detected in surface soil and all metals are identified as within ambient background concentrations, then no further action, if not, proceed to decision rule 2.2.

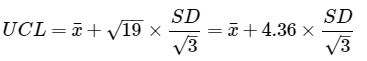

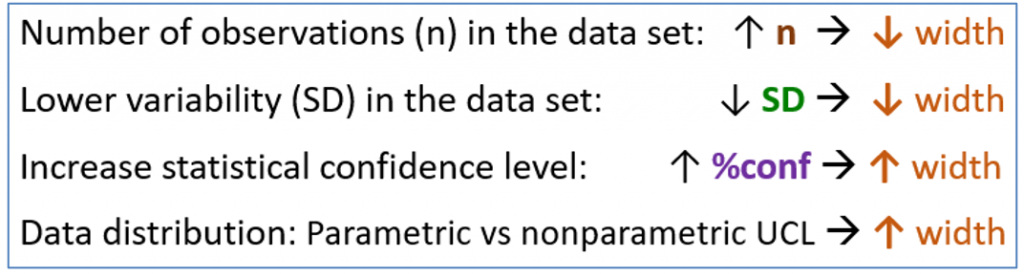

- Decision rule 2.2 – If replicate RSDs of risk-driving COPCs exceed measurement quality objectives (MQOs), then further investigation, if not, calculate cumulative risks and hazards and proceed to decision rule 2.3.

- Decision rule 2.3 – If cumulative risks and hazards in any EU are above the regulatory acceptable points of departure for risk (1 x 10-6) and hazard (1.0), then for all EUs further action or investigation, if not, no further action.

Identify Information Inputs – Specify study goal data needs. Two information inputs are identified for Example 2A.

- Surface soil sampling and analysis are needed for OCPs and metals with detection limits below risk-based screening levels from ¼-acre EUs.

- Define MQOs, particularly the acceptable range of replicate RSDs. For example set 2A, an RSD of less than 20% is established as the MQO.

Define Spatial and Temporal Study Boundaries – Define DUs. The study area’s lateral boundary is the 30 acres of agricultural land that is proposed for residential development. The vertical boundary is defined as surface soil based on the release mechanism of broadcast pesticide application and the relatively low mobility of OCP pesticides in soil. Because the study questions are risk-based, the DUs are defined by the anticipated exposure areas, described above as ¼-acre EUs.

| Extrapolating to unsampled DUs within a large study area can be achieved in a scientifically defensible manner with ISM. |

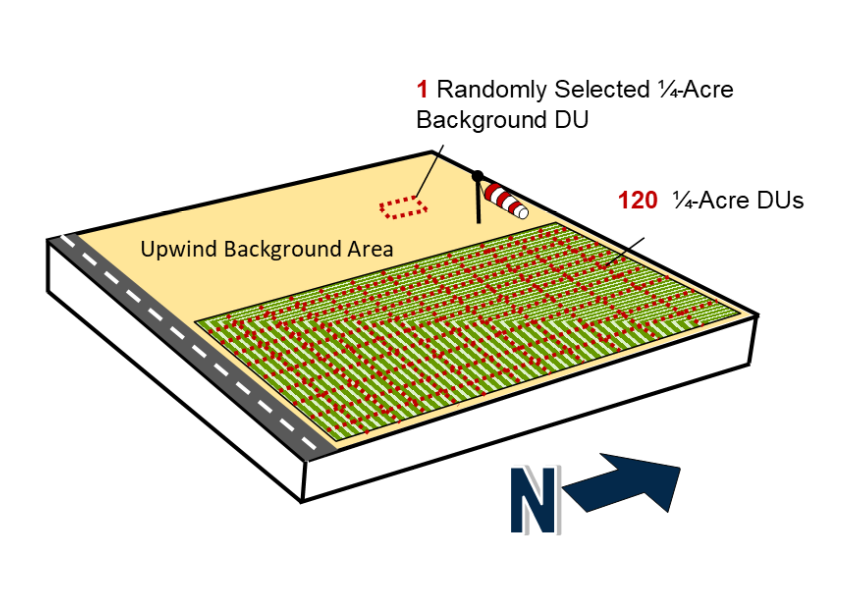

Extrapolating to unsampled DUs within a large study area can be achieved in a scientifically defensible manner with ISM. Section 3.1.3 and Section 3.1.4 touch on the concept of sampling a subset of SUs within a large DU. This concept is applied in this example to extrapolating conclusions from a subset of sampled DUs to a larger group of CSM-equivalent DUs, as described in more detail in Section 3.2.8.2. The utility of a pilot study for large areas to assess variability and obtain preliminary COPC concentration ranges is typically very beneficial. In this example, low variance among replicates is anticipated based on the CSM of broadcast pesticide applications, and the 30-acre study area is divided into 120 contiguous equally-sized DUs of a ¼ acre (Figure 3-9b) based on the residential lot size in the area. A subset of DUs can be randomly selected (such as with a random number generator) for sampling. Alternatively, a modified random selection process can be used to ensure that all regions of the 30-acre area are sampled in a proportional manner to reduce the uncertainty from extrapolation if the subset of DUs identified for sampling are grouped too closely together. For modified random selection, the 120 DUs would be allotted into spatial groups and equal numbers of DUs for sampling selected from each group.

Source: ITRC ISM Update Team, 2020.

Planning for the number of DUs to sample as the subset of the 120 DUs is a decision that involves all stakeholders and most critically must be sufficient to support the ultimate decisions made based on the extrapolated contaminant average concentration data. Example 2A decisions will be risk-based decisions, and considerations for addressing uncertainty in the risk estimates and risk-based decision errors should err on the side of protecting public health. Generally, practitioners would rather make the mistake of remediating a site that is already clean than make the mistake of not remediating a site that is contaminated.

As presented in Section 3.2.8.2, based on the statistical equations for upper tolerance limits (UTLs) using nonparametric methods, when there are a large number of DUs (more than 100), a subset of at least 59 DUs must be sampled to conclude that at least 95% of the site area is in compliance with 95% confidence (0.05 = α). From a practical standpoint, confidence in making correct decisions about a large-area site will increase as the proportion of the site area included in ISM sampling increases. Section 3.2.8.2 describes the statistical basis that supports sampling designs that can achieve specified decision error rates, given properties of the data and key assumptions. Based on these numerical simulation studies and statistics commonly applied in environmental investigations, there are conditions when compliance can be achieved by sampling a small portion of the study area (for example, 10% to 30%). The decision regarding the number of small-area DUs to sample should be based on spatial coverage (representativeness) of the site area, the likely degree of variability in soil concentrations across the site area, and the likely proximity of soil concentrations to ALs.

For Example 2A, the project team agrees to determine which DUs to sample using modified random selection and to sample 20 DUs (17% of the total site area, or 5 acres from the 30 acres). This decision to sample 20 DUs by the project team is informed by similar nearby study areas with thorough investigations that had a low CV (<1) and COPC concentrations between 10- and 100-fold lower than risk-based screening levels for all DUs. Therefore, sampling 17% of the site area, or 20 DUs, should be sufficient to avoid a high rate of false compliance decisions while achieving cost savings relative to sampling 59 DUs. Furthermore, the project team agrees that if the CSM assumptions are proven incorrect with either (1) high RSD between replicates or (2) high variability among DUs, then further investigation will ensue with sampling of additional DUs and/or sampling with more increments per DU, rather than extrapolation of the results to the remaining 100 DUs. The anticipated COPC concentrations (that is, 95% UCL to AL ratio of 0.01 to 0.1) plus these two caveats help reduce the uncertainty in drawing conclusions from sampled DUs to other DUs at the site.

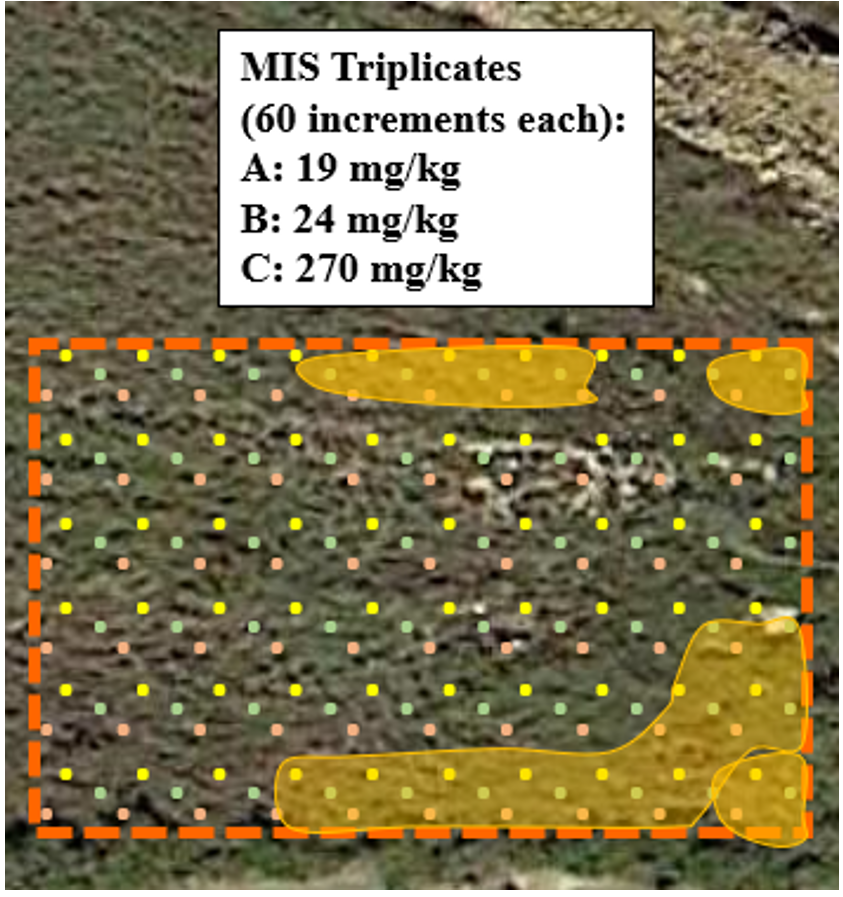

Planning by the project team for the number of increments to sample should consider multiple factors. ISM applies soil science and Gy’s theory to reduce soil sampling heterogeneity and thereby decrease variability in soil contaminant concentrations among increments and replicates. ISM variability can be reduced by increasing the number of increments collected for an ISM replicate, as described in Section 2. Some of the factors that contribute to heterogeneity in soil contaminant concentrations are taken into consideration when establishing DU boundaries, such as the location of the primary source and secondary or tertiary sources and the soil depths of interest to answering the project team’s study questions. Some key factors to consider in deciding on the number of increments in systematic planning are the primary sources and the physical phases of the primary sources (solid or liquid), as well as physical (solid or liquid phase) and chemical properties (water solubility and lipophilicity) that affect COPC fate and transport. The effect of these variables on the heterogeneity in soil contaminant concentrations and the number of increments that should be collected within a DU are illustrated in Figure 3-4. A minimum of 30 increments should be used for each ISM sample or replicate – up to 100 increments may be necessary for some sources/COPCs. The minimum of 30 increments is based on statistical simulations and over a decade of practitioner experience (see Section 2). For certain source types and chemical classes (such as munition residues, metals at small-arms firing ranges, paint chips, ash with dioxins/furans, polynuclear aromatic hydrocarbons [PAHs], and PCBs in transformer oil), more than 30 increments may be necessary due to the highly heterogeneous way these contaminants can be distributed in soil. Case Study 3 in Appendix A demonstrated that 50 increments were insufficient for benzo(a)pyrene (BaP) from a landfill source. Case Study 2 (Clausen et al. 2018a) in Appendix A investigated various numbers of increments (5, 10, 20, 30, 50 100, and 200) to determine how the number of increments affect data quality and concluded ISM samples with 100 increments were appropriate. A field investigation on a diverse set of sources and COPCs from three different sites was undertaken by Brewer et al (Brewer, Peard, and Heskett 2016), which concluded that the magnitude of variability depends in part on the contaminant type and the nature of the release. The sites were (A) a former manufacturer of arsenic-treated ceiling and wall boards, (B) a former municipal incinerator, and (C) a former radio broadcasting station with releases of arsenic, lead, and PCBs in oil, respectively. Variability was well managed for arsenic (site A) and lead (site B), where the use of 54 increments each resulted in RSDs of 6.5% and 20%, respectively. The concentration of PCBs from transformer oil (site C) was so heterogeneous that even in a very small DU, 60 increments were not enough to address the distributional heterogeneity with an RSD of 138%.

For Example 2A, the project team decides to collect three replicates of 50 increments within each of the 20 DUs. Although 30 increments may be sufficient for broadcast application of water-based pesticides that contain arsenic or lead, the use of 50 increments per ISM replicate is decided by the project team to increase the ISM replicate mass because OCPs are hydrophobic (having low water solubility) and have been demonstrated to have relatively large small-scale variability (leading to a higher degree of variability among the increments) at a nearby agricultural field. Table 3-1 presents the variables considered by the project team to determine the number of increments per DU in the agricultural field for Example 2A.

Table 3-1. Variables considered in determining the number of increments per DU for Example 2.

Source: ITRC ISM Update Team, 2020.

| Area | Source(s) | COPCs | # Increments | Rationale |

| Agricultural Field | Pesticides application (lead arsenate) | Arsenic | 30 | Water-based pesticides |

| Pesticides application (OCPs) | OCPs | 50 | Hydrophobic COPCs | |

| Pesticide Mixing | Spills or ground surface disposal | Full suite of pesticides and petroleum fractions | 70 | Brewer et al., 2016 (PCBs n > 60) n = 70 to 100 |

| Residential Area: Current (Example 2B-1) | Paint chips | Metals (lead) | 80 | Hawaii DOH, 2016 (n > 75) |

| Termiticides | OCPs | 80 | Brewer et al., 2016 (PCBs n > 60) n = 70 to 100 |

|

| Pesticide drift (lead arsenate and OCPs) | Arsenic and OCPs | 80 | Efficiency of one sampling strategy Unknown heterogeneity (n = 50, Hawaii DOH, 2016) | |

| Residential Area: Future (Example 2B-2) | Paint chips | Metals (lead) | 80 | Hawaii DOH, 2016 (n > 75) |

| Termiticides | OCPs | 80 | Brewer et al., 2016 (PCBs n > 60) n = 70 to 100 |

|

| Pesticide drift (lead arsenate and OCPs) | Arsenic and OCPs | 50 | Efficiency DUs 1 to 4 for one sampling strategy Unknown heterogeneity (n = 50, Hawaii DOH, 2016) |

|

| Dump Area Debris | Tires, 55-gallon drums of unknown contents, ash, oil-stained soil, debris | Metals, OCPs, full suite of pesticides, SVOCs, PAHs, dioxins/furans, petroleum fractions | 80 | Brewer et al., 2016 (PCBs n > 60, ash lead n = 50 to 60) Sources and COPCs suggest high heterogeneity n = 70 to 100 |

The three replicate locations are established by using systematic random placement as per Section 3.1.5.4 (Figure 3-5), with each DU divided into 50 equally-sized grids. For systematic random selection, after the initial three replicate location is randomly selected, this placement is applied to the remaining 49 grid cells for that DU. If the increments unevenly represent the furrows or crests of the rows, then discuss with the regulatory team the use of a modified systematic random selection process for placement of increments. Similarly, contingencies may be needed if increment locations are inaccessible due to the physical presence of crop vegetation (see Section 4).

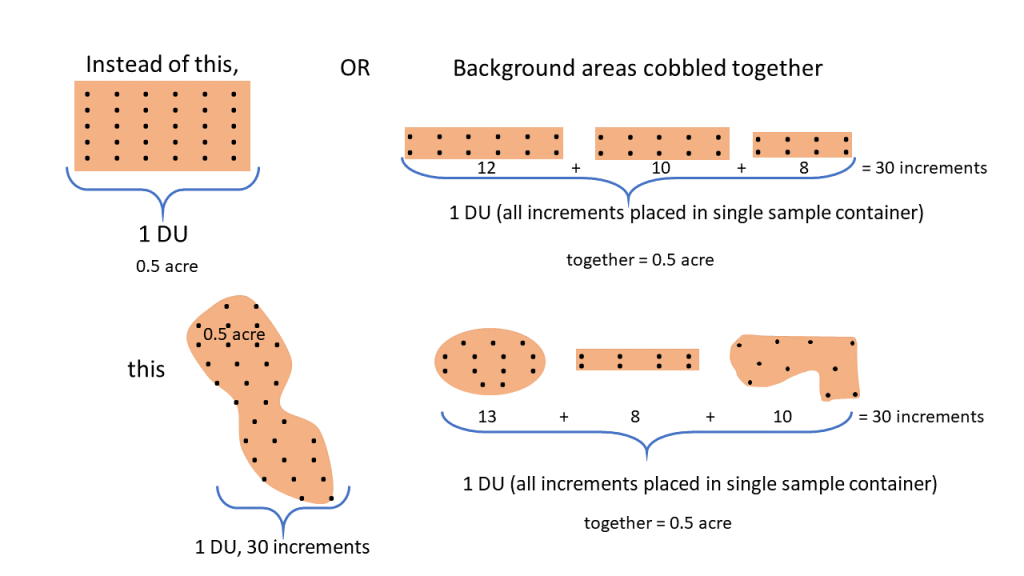

Defining background DUs is a concept important for both risk assessment and risk management. Background soil concentrations for native metals and ubiquitous anthropogenic chemicals such as dioxins and PAHs are often used in risk assessment (see Section 8.4) to establish cleanup goals or verify remedial actions. Background DUs need to be of comparable area and depth (volume) as the site DUs and from a geologically similar area with no known or suspected sources of contamination. ISM background samples need to be of equal sample support – that is, both the field increment volume and number of increments and laboratory subsampling protocols need to match those of the site ISM samples. Ideally, ISM background samples should be comparable to site data and share the attributes of:

- same DU size (volume)

- same sample range of depths

- same soil type (such as sand or loam)

- same volume of soil per increment

- same number of increments and replicates in the DU

- same increment density (such as 30 increments per ½ acre; see Figure 3-10)

- same field methods

- same analytical methods

In situations where nearby background regions are difficult to find, areas equal to the site’s DUs from irregularly shaped regions or a combination of discontinuous regions are alternatives (see Figure 3-10 with an example of a background 0.5-acre DU). If different soil types exist within the site, or if soils are derived from different parent material, then multiple ISM background datasets may be needed with one for each soil type.

Source: ITRC ISM Update Team, 2020.

The upwind background area in Figure 3-9a is ideal to avoid any potential cross-contamination from wind deposition during pesticide applications, plowing activities, or windy events. The background sampling must consist of the same number of replicates per DU as the study area, which in Example 2A is three replicates. For Example 2A, one ¼-acre DU is selected from the background area to collect the three replicates of 50 increments each (Figure 3-9b). Similar to the agricultural area DUs described above, the background three replicate locations are established using systematic random placement with the background DU divided into 50 equally-sized areas.